In our first installment of this series, Vulcan’s Mike Parkin looked at the relative hype versus the current reality of ChatGPT as a serious cybersecurity threat. In this installment, Vulcan’s Gal Gonen dives into ways ChatGPT can be used for cyber risk management.

ChatGPT promises an opportunity for managing cyber risk

We asked the bot:

How can chatGPT be useful for cyber risk management?

And got this answer:

ChatGPT can be useful for cyber risk management in several ways, including:

- Risk Assessment: ChatGPT can assist in identifying and assessing potential risks by analyzing large amounts of data, such as network logs and security alerts, to identify patterns and anomalies.

- Risk Mitigation: ChatGPT can help to generate mitigation plans by providing recommendations and automated responses to identified risks.

- Compliance: ChatGPT can be used to help organizations comply with regulations such as HIPAA and PCI-DSS by generating compliance reports and identifying potential compliance violations.

- Risk Monitoring: ChatGPT can be used to monitor potential risks in real time by analyzing network traffic, log files, and security alerts.

- Business Impact Analysis: ChatGPT can be trained to understand and analyze business processes and systems to help organizations understand the impact of a potential cyber incident on their operations and revenue.

- Communication: ChatGPT can assist in communicating risks and risk management decisions to stakeholders, such as employees and investors, by generating reports and alerts that can be easily understood.

If you ask ChatGPT the same question yourself, you may get somewhat different answers as to what it can do in the context of cybersecurity. When we asked the question again ourselves, it returned the following:

ChatGPT can be useful for cyber risk management in a variety of ways, including:

- Generating natural language explanations of complex cybersecurity concepts and risks to make them more accessible to non-technical stakeholders.

- Answering frequently asked questions about cybersecurity and providing information on best practices for protecting against cyber threats.

- Assisting in incident response by providing automated suggestions for containing and mitigating a cyber incident.

- Creating and responding to simulated phishing emails to train employees on how to identify and respond to potential phishing attempts.

- Automating the generation of reports and analysis on cyber threats and vulnerabilities.

- Identifying patterns in security log data and alerting security teams to potentially malicious activity.

This time it focused much more on communication, though it also included some interesting technical suggestions. In both cases it shows the platform’s versatility, if not its consistency.

As a conversational AI, ChatGPT is quite good at sounding like a real human being when it’s interacting with someone. It can even do a passable job of sounding like a specific individual, as one person found out when he asked ChatGPT to write a commercial in his voice.

But let’s take one of the examples it generated and ask for more details. How, exactly, would we use ChatGPT to identify patterns in security log data and alert teams to potentially malicious activity?

Let’s get more specific

When we ask it:

How could I use ChatGPT to identify patterns in cybersecurity log data?

It responds:

One way to use ChatGPT to identify patterns in cybersecurity log data is to train it on a dataset of log data and then use it to generate summary reports or alerts. Here are a few steps you can take to accomplish this:

- Collect and preprocess a dataset of log data. This may include cleaning and normalizing the data, as well as extracting relevant features for analysis.

- Train ChatGPT on the log data dataset. This can be done by fine-tuning a pre-trained model or training a new model from scratch.

- Use the trained model to generate summary reports or alerts based on the log data. This can be done by inputting a sample of log data and having the model output a summary or alert based on the patterns it has learned from the training data.

- Fine-tune the model to improve its performance by providing more data, and adjust the model’s parameters to get more accurate results.

- Automate the process with a scheduled task or integrate it with an existing log management system to get the data in real time.

You can further improve the model by using other techniques like supervised or unsupervised learning, anomaly detection, and more, depending on the organization’s use case.
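To make the first steps of that answer a little more concrete, here is a minimal Python sketch of the collect, preprocess, and flag stages. It does not call ChatGPT at all: we swap in a simple median-based threshold as a stand-in for a trained model. The log format, the field names, and the `factor` and `min_events` thresholds are all assumptions we made up for illustration, not anything ChatGPT or a particular log management product prescribes.

```python
import re
from collections import Counter
from statistics import median

# Hypothetical log format, invented for this sketch:
#   2023-01-15T10:00:00Z sshd FAILED_LOGIN user=alice src=10.0.0.5
LINE_RE = re.compile(
    r"^(?P<ts>\S+)\s+(?P<service>\S+)\s+(?P<event>[A-Z_]+)\s+"
    r"user=(?P<user>\S+)\s+src=(?P<src>\S+)$"
)


def parse_line(line):
    """Normalize one raw log line into a dict, or return None if it does not match."""
    match = LINE_RE.match(line.strip())
    return match.groupdict() if match else None


def failed_logins_by_source(lines):
    """Feature extraction: count FAILED_LOGIN events per source address."""
    counts = Counter()
    for line in lines:
        record = parse_line(line)
        if record and record["event"] == "FAILED_LOGIN":
            counts[record["src"]] += 1
    return counts


def flag_outliers(counts, factor=3.0, min_events=5):
    """Return sources with at least `min_events` failures and more than
    `factor` times the median failure count (both thresholds are assumed)."""
    if not counts:
        return []
    baseline = median(counts.values())
    return sorted(
        src for src, n in counts.items()
        if n >= min_events and n > factor * baseline
    )


def summarize(flagged, counts):
    """Turn flagged sources into short, human-readable alert lines."""
    return [f"ALERT: {counts[src]} failed logins from {src}" for src in flagged]


# Made-up sample data: two quiet sources, one noisy one, and two lines to ignore.
sample = (
    ["2023-01-15T10:00:00Z sshd FAILED_LOGIN user=alice src=10.0.0.5"]
    + ["2023-01-15T10:01:00Z sshd FAILED_LOGIN user=bob src=10.0.0.6"] * 2
    + ["2023-01-15T10:02:00Z sshd FAILED_LOGIN user=root src=203.0.113.9"] * 10
    + ["2023-01-15T10:03:00Z sshd LOGIN_OK user=alice src=10.0.0.5", "garbage line"]
)

counts = failed_logins_by_source(sample)
alerts = summarize(flag_outliers(counts), counts)
```

In a real deployment, the alert lines could be handed to a conversational model to turn into a plain-language summary, while the detection itself stays in code the team can inspect and test.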

We could drill down into each of these points in turn, but it’s clear that ChatGPT can hold our hands through using it in real-world scenarios. The challenge with this example is that it suggests we feed it data and train it appropriately. That raises two issues: we would potentially be sharing our log data with a third party, and we would need someone with the technical skills to either properly train a new model or adapt an existing one to the organization’s needs.
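One way to reduce the data-sharing concern is to pseudonymize identifying fields before any log line leaves the organization. The sketch below is a minimal illustration, assuming the same made-up log format with `user=` and IPv4 `src=` fields. The `redact_line` and `pseudonym` helpers are hypothetical names of ours, and a keyed hash like this is pseudonymization rather than full anonymization, so it complements a data-sharing review instead of replacing one.

```python
import hashlib
import hmac
import re

# Patterns for the fields we treat as sensitive in this sketch (assumed format).
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")
USER_RE = re.compile(r"\buser=(\S+)")


def pseudonym(value, key, prefix):
    """Map a value to a stable token using HMAC-SHA256 with a secret key."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"{prefix}-{digest[:10]}"


def redact_line(line, key):
    """Replace IPv4 addresses and user names with keyed pseudonyms.

    The same input value always maps to the same token under the same key,
    so patterns such as repeated failures from one source are preserved.
    """
    line = IPV4_RE.sub(lambda m: pseudonym(m.group(0), key, "ip"), line)
    line = USER_RE.sub(lambda m: "user=" + pseudonym(m.group(1), key, "user"), line)
    return line


# The key is a placeholder; a real one would be generated and stored securely.
demo_key = b"demo-key-not-for-production"
redacted = redact_line(
    "2023-01-15T10:00:00Z sshd FAILED_LOGIN user=alice src=10.0.0.5", demo_key
)
```

Because the mapping is keyed, only someone holding the key can recompute which token corresponds to a given user or address when an alert needs follow-up.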

OpenAI’s ChatGPT is an example of what their platform can achieve in the conversational AI space, and how that conversational AI can be used to answer deeper technical questions and deliver more complex technical solutions. But it’s not, itself, a dedicated cybersecurity tool.

Still, it can be used as one, and it points toward some of the ways this sort of technology can greatly enhance the capabilities of a security operations team.

Our take

We don’t know what the future holds or how machine learning will develop in the cyber risk field. But we do know one thing: when it comes to cyber risk, we can’t afford to take chances. No matter how and when we use such tools, we must ask ourselves:

- While conversational AI can add some valuable capabilities, it can also introduce new threats to security operations. Does it create more noise than it filters out?

- Can it automatically assess risk based on actual business impact/logic?

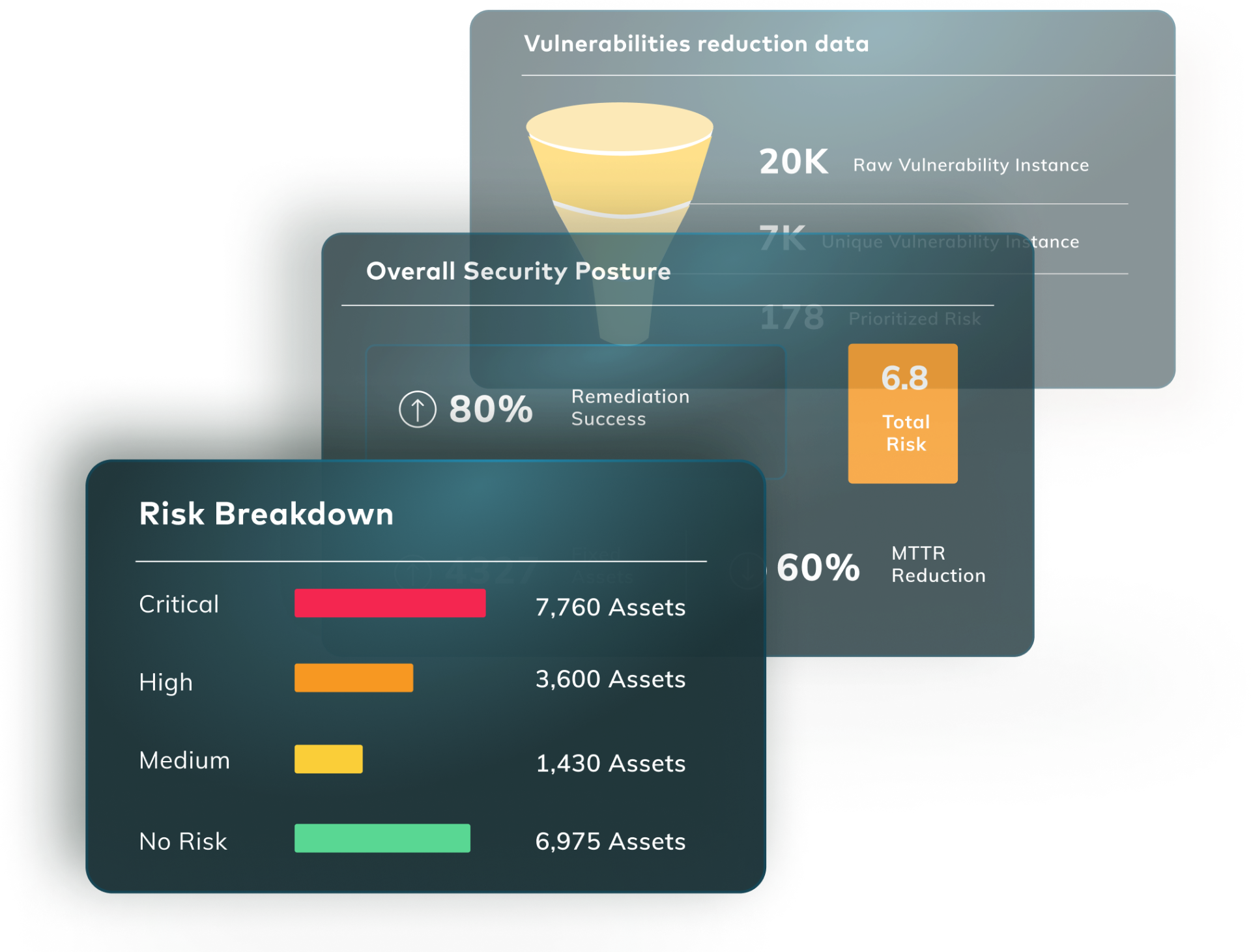

- Can it improve the organization’s security posture across all attack surfaces, including on-premises, cloud instances, and applications?

- Can threat actors gain as much benefit from it as legitimate organizations do?

We went into more detail on the first and fourth questions in the first blog in this series. The second and third questions remain to be answered. Aspects of the artificial intelligence behind ChatGPT are already in use in multiple security applications, including some machine learning capabilities in Vulcan Cyber itself.

These defensive capabilities will evolve and improve over time, as cybersecurity vendors like us find the most effective ways to use them in day-to-day operations. And we realize that threat actors will also be learning, evolving, and trying to figure out how best to use this sort of artificial intelligence for their malicious purposes.

Will we see a HAL 9000 or a Daystrom M-5 facing off against R. Daneel Olivaw or GERTY? No. But we are already seeing cybersecurity professionals and threat actors using machine learning techniques against each other.

The examples we’ve presented here are just a few of the ways ChatGPT as it stands can be used in cyber risk management. With more specialized development, this kind of advanced expert system and conversational AI can become a powerful tool.

Unfortunately, our adversaries are certainly learning the same lessons.