
EPSS is showing promising results when it comes to threat intelligence, but there are some caveats. Here's what you need to know.

The Common Vulnerability Scoring System (CVSS) has long been the go-to standard for assessing the severity of security vulnerabilities. However, CVSS scores don’t tell the whole story – they lack the context to evaluate the real-world risk of a vulnerability being exploited.

With new vulnerabilities discovered daily, organizations need a more comprehensive tool to not only assess severity but also predict the likelihood of exploitation.

Enter the Exploit Prediction Scoring System (EPSS). CVSS describes how severe a vulnerability could be in theory. EPSS acts as boots on the ground, providing a practical estimate of the probability that a vulnerability will be exploited in the wild.

By incorporating much-needed context, EPSS allows organizations to streamline and prioritize their remediation efforts more efficiently. Still, the EPSS system carries its own unique challenges.

Here are the basics:

EPSS is a machine learning tool that scores vulnerabilities based on their likelihood of being exploited. It provides real-world context missing from CVSS severity ratings alone. While limited in its own right, EPSS offers a data-driven way to prioritize vulnerabilities by exploitation risk when used with other security tools and processes. As it evolves, EPSS is expected to significantly impact vulnerability management strategies.


EPSS is a machine learning-based system that assigns software vulnerabilities (CVEs) a probability score based on their likelihood of being exploited. The score is a number between 0.0 and 1.0. It represents the probability that the vulnerability will be exploited in the wild within the next 30 days. The higher the score, the higher the likelihood of exploitation.
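Because each EPSS score is a probability, a few simple operations follow from that definition. The minimal sketch below assumes the scores are well-calibrated. It checks that a score lies in the valid range, ranks a backlog from most to least likely to be exploited, and sums the scores. The sum gives the expected number of those CVEs exploited within the 30-day window. The function names, CVE IDs, and score values are hypothetical, made up purely for illustration.

```python
from typing import Mapping

def validate_epss(score: float) -> float:
    """Return the score unchanged if it is a valid probability, else raise."""
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"EPSS score must be between 0 and 1, got {score}")
    return score

def expected_exploited(scores: Mapping[str, float]) -> float:
    """Expected number of these CVEs exploited in the next 30 days.

    Assumes the scores are well-calibrated probabilities. By linearity of
    expectation, the sum holds even if exploitations are not independent.
    """
    return sum(validate_epss(s) for s in scores.values())

def rank_by_epss(scores: Mapping[str, float]) -> list[str]:
    """CVE IDs ordered from most to least likely to be exploited."""
    return sorted(scores, key=lambda cve: scores[cve], reverse=True)

# Hypothetical CVE IDs and scores, for illustration only.
backlog = {"CVE-0000-0001": 0.125, "CVE-0000-0002": 0.5, "CVE-0000-0003": 0.375}
print(rank_by_epss(backlog))        # ['CVE-0000-0002', 'CVE-0000-0003', 'CVE-0000-0001']
print(expected_exploited(backlog))  # 1.0
```

In this made-up backlog, the scores imply that about one of the three CVEs would be exploited in the next 30 days. The ranking shows which one to look at first.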

EPSS pools data from a diverse range of sources. These include MITRE's CVE List, text-based tags extracted from each CVE description, and the references associated with each CVE.

It also takes into account published exploit code from sources such as Metasploit, ExploitDB, and GitHub, offering a broader picture of the vulnerability landscape.

In addition, EPSS incorporates several other inputs:

- information from security scanning tools
- CVSS v3 base score vectors
- vendor data drawn from CPE (Common Platform Enumeration)
- ongoing observations of exploitation activity in the wild, from sources such as Fortinet and AlienVault, which keep its picture of cyber security threats current

The goal of EPSS is to help network defenders prioritize their vulnerability remediation efforts.

When evaluating the success of a threat intelligence system like EPSS, two key metrics come into play: precision and recall. These metrics provide a way to measure the system’s performance and effectiveness in identifying real threats.

Precision measures the accuracy of a threat intelligence system. It asks: of all the items the system flags as threats, what proportion are real threats? In other words, it is the percentage of true positives among all positive predictions.

For example, if a system flags 100 potential threats and 80 of them are real, its precision is 80%. A higher precision score means the system makes fewer false positive predictions.

Recall, on the other hand, gauges the completeness or comprehensiveness of a threat intelligence system. It quantifies the proportion of genuine threats that the system manages to identify out of the entire pool of existing threats.

For instance, if there are 100 actual threats present, and the system identifies 90 of them, the system’s recall would be 90%. A high recall score suggests that the system is capable of detecting a significant portion of the real threats in the environment.
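The two definitions differ only in their denominators. Precision divides by everything the system flagged; recall divides by everything that was actually a threat. The minimal sketch below reproduces the worked examples from the two preceding paragraphs. The function names are our own, and raising an error on an empty denominator is one possible convention, not a standard.

```python
def precision(true_positives: int, flagged: int) -> float:
    """Share of flagged items that are real threats: TP / (TP + FP)."""
    if flagged == 0:
        raise ValueError("precision is undefined when nothing is flagged")
    return true_positives / flagged

def recall(true_positives: int, actual: int) -> float:
    """Share of real threats that were flagged: TP / (TP + FN)."""
    if actual == 0:
        raise ValueError("recall is undefined when there are no real threats")
    return true_positives / actual

# Worked examples from the text: 80 of 100 flagged are real; 90 of 100 real threats found.
print(precision(80, 100))  # 0.8
print(recall(90, 100))     # 0.9
```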

Both precision and recall are crucial metrics for evaluating the performance of EPSS. A high precision score would indicate that EPSS is accurately identifying vulnerabilities that are likely to be exploited, minimizing false positives and helping organizations focus their efforts on real threats.

Similarly, a high recall score would mean that EPSS is comprehensive in detecting a large percentage of vulnerabilities that pose a genuine risk of exploitation, reducing the chances of missing critical threats.

By analyzing and optimizing EPSS’s precision and recall rates, developers and cyber security professionals can continually improve the system’s effectiveness in predicting exploitation risks and providing actionable intelligence for vulnerability management.

While EPSS predicts the likelihood of exploitation, CVSS measures the inherent severity of vulnerabilities. Both play crucial roles in vulnerability management but serve different purposes.

CVSS scores vulnerabilities based on their characteristics and potential impact, but it doesn't consider real-world threat data. EPSS, by contrast, bases its forecasts on regularly updated threat intelligence from the CVE repository and on empirical data about real-world attacks.

By integrating both EPSS and CVSS, organizations can gain a broader understanding of the threat environment, enabling more robust vulnerability management strategies. CVSS provides a solid foundation for understanding vulnerability severity, while EPSS adds an additional layer of insight by predicting the likelihood of exploitation.

Here’s an example:

A remediation strategy based exclusively on CVSS v3.1 patched vulnerabilities scoring 8.8 or higher. It achieved 5.7% precision and 34% recall.

Meanwhile, a strategy based on the newly released EPSS scores, with a threshold of 0.149 or higher, achieved 19.9% precision and 72.4% recall.
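A threshold strategy like this one flags every vulnerability at or above the cut-off. It then scores that choice against what was actually exploited afterwards, using precision and recall as defined earlier. The minimal sketch below shows the mechanics. It reuses the 0.149 threshold from the text, but the sample vulnerabilities and their outcomes are invented. The `Vuln` type and `evaluate_threshold` function are hypothetical, and the study behind the figures above may have measured things differently.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Vuln:
    cve: str         # hypothetical identifier
    epss: float      # predicted probability of exploitation in the next 30 days
    exploited: bool  # whether exploitation was actually observed afterwards

def evaluate_threshold(vulns: list[Vuln], threshold: float) -> tuple[float, float]:
    """Precision and recall of 'remediate every CVE with epss >= threshold'."""
    flagged = [v for v in vulns if v.epss >= threshold]
    true_positives = sum(1 for v in flagged if v.exploited)
    actually_exploited = sum(1 for v in vulns if v.exploited)
    # Report 0.0 instead of raising when a ratio is undefined (empty denominator).
    precision = true_positives / len(flagged) if flagged else 0.0
    recall = true_positives / actually_exploited if actually_exploited else 0.0
    return precision, recall

# Invented sample data, for illustration only.
sample = [
    Vuln("CVE-0000-0001", 0.90, True),
    Vuln("CVE-0000-0002", 0.40, False),
    Vuln("CVE-0000-0003", 0.30, False),
    Vuln("CVE-0000-0004", 0.20, True),
    Vuln("CVE-0000-0005", 0.05, True),
    Vuln("CVE-0000-0006", 0.01, False),
]
p, r = evaluate_threshold(sample, threshold=0.149)
print(f"precision={p:.2f} recall={r:.2f}")  # precision=0.50 recall=0.67
```

Raising the threshold usually trades recall for precision. Lowering it does the opposite, at the cost of more remediation work.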

Smaller organizations, with less remediation capacity, are less able to achieve high recall but can still achieve a good level of precision. Organizations with more resources can achieve both better recall and better precision with EPSS.

Ostensibly, shifting to a prioritization strategy referencing EPSS yields more reliable results than other existing methods like CVSS.

But this comparison to CVSS can be misleading.

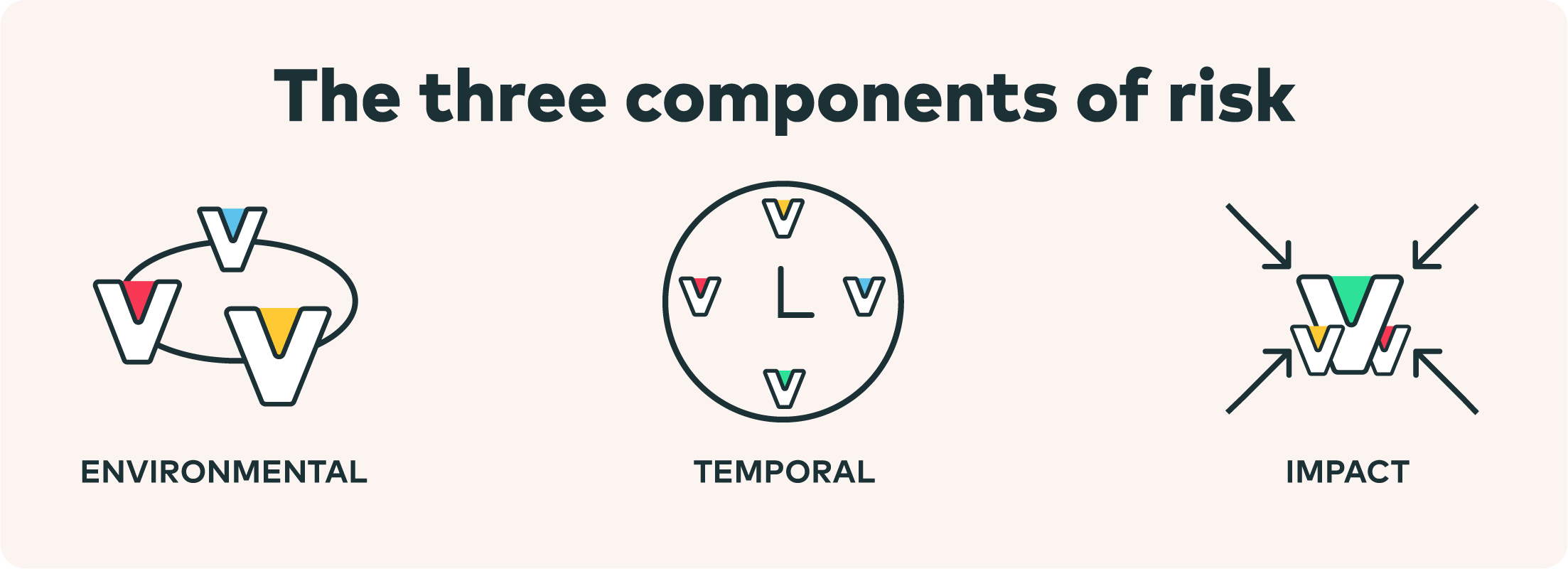

For most of the organizations participating in the research, only the CVSS Base score was considered. The Base group represents the intrinsic qualities of a vulnerability that are constant over time and across user environments. Temporal and Environmental metrics are rarely used, which leaves the assessment somewhat incomplete.

The Temporal group reflects the characteristics of a vulnerability that change over time. Examples include the maturity of available exploit code and whether an official fix is available. Meanwhile, the Environmental group captures the characteristics of a vulnerability that are unique to a user's environment.
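Applying the Temporal group takes very little calculation. The CVSS v3.1 specification defines the Temporal score as the Base score multiplied by three weights: Exploit Code Maturity (E), Remediation Level (RL), and Report Confidence (RC). The product is then passed through the specification's `Roundup` function. The sketch below follows that published formula and its weights. The function names are our own, and the Base score used in the usage example is arbitrary.

```python
import math

# Temporal metric weights from the CVSS v3.1 specification.
# "X" (Not Defined) leaves the score unchanged.
EXPLOIT_CODE_MATURITY = {"X": 1.0, "H": 1.0, "F": 0.97, "P": 0.94, "U": 0.91}
REMEDIATION_LEVEL = {"X": 1.0, "U": 1.0, "W": 0.97, "T": 0.96, "O": 0.95}
REPORT_CONFIDENCE = {"X": 1.0, "C": 1.0, "R": 0.96, "U": 0.92}

def roundup(value: float) -> float:
    """CVSS v3.1 Roundup: smallest one-decimal number >= value.

    Works on an integer scale to avoid floating-point artifacts. Python's
    round() is round-half-to-even, which only matters at exact .5 ties.
    """
    int_input = round(value * 100_000)
    if int_input % 10_000 == 0:
        return int_input / 100_000.0
    return (math.floor(int_input / 10_000) + 1) / 10.0

def temporal_score(base: float, e: str = "X", rl: str = "X", rc: str = "X") -> float:
    """CVSS v3.1 Temporal score for a given Base score and Temporal metrics."""
    return roundup(
        base * EXPLOIT_CODE_MATURITY[e] * REMEDIATION_LEVEL[rl] * REPORT_CONFIDENCE[rc]
    )

print(temporal_score(9.8))                 # 9.8 (all Temporal metrics Not Defined)
print(temporal_score(9.8, "F", "O", "C"))  # 9.1 (functional exploit, official fix)
```

Every weight is at most 1.0, so the Temporal score can only match or lower the Base score. A Base-only assessment therefore tends to overstate severity for vulnerabilities that already have an official fix or lack working exploit code.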

In practice, organizations rarely configure the Temporal and Environmental metric groups because doing so is difficult. The challenges are both informational and operational: a lack of tools and the sheer scale of the vulnerabilities.

Both EPSS and CVSS seek to help network defenders better prioritize vulnerability management. FIRST, the organization behind EPSS, states that vulnerabilities with both high severity and high probability of exploitation should be the first priority. Vulnerabilities with low severity and low probability can be deprioritized.

That leaves the mixed cases: high severity but low probability, or low severity but high probability. These require additional consideration of the user's environment, systems, and information, and they make up most vulnerabilities.
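This guidance amounts to a simple two-by-two classification. The minimal sketch below shows one way to encode it. The cut-offs (`CVSS_HIGH = 7.0`, `EPSS_HIGH = 0.1`) and the `Priority` labels are hypothetical choices for illustration; FIRST does not prescribe them, and each organization would set its own.

```python
from enum import Enum

class Priority(Enum):
    FIRST = "high severity, high probability: remediate first"
    REVIEW = "mixed: needs environment-specific analysis"
    DEPRIORITIZE = "low severity, low probability: deprioritize"

# Hypothetical cut-offs, not values published by FIRST.
CVSS_HIGH = 7.0
EPSS_HIGH = 0.1

def triage(cvss_base: float, epss: float) -> Priority:
    """Place a vulnerability in one of the severity/probability quadrants."""
    high_severity = cvss_base >= CVSS_HIGH
    high_probability = epss >= EPSS_HIGH
    if high_severity and high_probability:
        return Priority.FIRST
    if not high_severity and not high_probability:
        return Priority.DEPRIORITIZE
    # Both mixed quadrants fall through to manual, context-aware review.
    return Priority.REVIEW

print(triage(9.8, 0.01).name)  # REVIEW
```

The `REVIEW` branch is deliberately a catch-all. The mixed quadrants are where environmental context, compensating controls, and business impact have to come in.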

While EPSS is a valuable tool for vulnerability management, it is essential to understand its challenges and limitations to ensure it is used effectively and in the appropriate context.

EPSS is designed specifically to estimate the likelihood that a vulnerability will be exploited within the next 30 days. However, it does not account for various other factors that contribute to overall cyber risk assessment.

For instance, EPSS does not consider specific environmental factors or compensating controls that may be in place within an organization’s network. Additionally, it does not attempt to estimate the potential impact if a vulnerability were to be successfully exploited.

EPSS focuses solely on the threat component of risk, which is just one consideration in a comprehensive, risk-based approach to vulnerability management. As such, EPSS should not be treated as the sole indicator of an organization's cyber risk.

One of the challenges with EPSS is that its scores are not static. They change as new data becomes available, so at any given moment a score may lag behind rapid shifts in the threat landscape.

For example, the infamous Log4Shell vulnerability (CVE-2021-44228) initially had a relatively low EPSS score. It took several days for the score to rise to a level that reflected how likely the vulnerability actually was to be exploited.

Similarly, there have been instances where EPSS scores have fluctuated significantly within a short period due to the discovery of new exploit code or updated intelligence.
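Because scores move, a prioritization decision made last week may no longer hold. One simple safeguard is to keep a daily history of each CVE's score and re-triage whenever the score jumps. The minimal sketch below flags day-over-day changes above a cut-off. The `flag_jumps` function, the 0.2 default, and the sample history are hypothetical and not drawn from real EPSS data.

```python
def flag_jumps(
    history: list[tuple[str, float]], min_delta: float = 0.2
) -> list[tuple[str, float, float]]:
    """Return (date, previous, current) for each day-over-day change >= min_delta.

    `history` is a chronologically ordered list of (date, epss) pairs for one CVE.
    Both rises and drops are flagged, since either may change a priority decision.
    """
    jumps = []
    for (_, prev), (date, curr) in zip(history, history[1:]):
        if abs(curr - prev) >= min_delta:
            jumps.append((date, prev, curr))
    return jumps

# Invented score history, for illustration only.
history = [("2024-01-01", 0.05), ("2024-01-02", 0.08),
           ("2024-01-03", 0.45), ("2024-01-04", 0.90)]
for date, prev, curr in flag_jumps(history):
    print(f"{date}: {prev:.2f} -> {curr:.2f}")
```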

As mentioned earlier, EPSS does not factor in the specific environmental conditions or compensating controls within an organization’s network. This means that vulnerabilities with high EPSS scores may not necessarily pose a significant risk if appropriate mitigations are in place.

Furthermore, EPSS does not provide any analysis or estimation of the potential impact if a vulnerability were to be successfully exploited. Impact assessment is a crucial component of risk management, and EPSS alone cannot address this aspect.

While EPSS is a valuable tool, it is important to recognize situations where concrete evidence of exploitation should take precedence over EPSS scores. The organization behind EPSS, FIRST, acknowledges this limitation and advises that if there is clear evidence of a vulnerability being actively exploited, that information should supersede the EPSS score.

For example, in the case of the Google Chrome zero-day vulnerability (CVE-2022-3075), the vulnerability did not have an EPSS score initially, despite being actively exploited in the wild. In such instances, organizations should rely on other threat intelligence sources and prioritize remediation accordingly, rather than solely relying on EPSS scores.
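FIRST's guidance can be written as a precedence rule. Evidence of active exploitation wins outright. A missing score means "look into it", not "low risk". Only otherwise does the EPSS score decide. The minimal sketch below assumes a `known_exploited` flag supplied by another threat intelligence source, such as a known-exploited-vulnerabilities feed. The function name, labels, and 0.1 threshold are hypothetical.

```python
from typing import Optional

def effective_priority(
    epss: Optional[float], known_exploited: bool, epss_threshold: float = 0.1
) -> str:
    """Combine EPSS with exploitation evidence; labels are illustrative."""
    # Concrete evidence of exploitation supersedes the EPSS score.
    if known_exploited:
        return "urgent"
    # No score yet (e.g. a brand-new CVE): absence of a score is not evidence of low risk.
    if epss is None:
        return "investigate"
    return "high" if epss >= epss_threshold else "normal"

print(effective_priority(None, known_exploited=True))  # urgent
```

A zero-day like CVE-2022-3075 falls into the first branch even before EPSS assigns it a score.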

EPSS represents a significant step forward in the field of vulnerability management. By leveraging machine learning and real-world threat data, EPSS provides a data-driven, dynamic approach to predicting the likelihood of vulnerability exploitation.

While not a silver bullet, EPSS offers several advantages over traditional vulnerability scoring systems and can help organizations prioritize their remediation efforts more effectively.

EPSS plays a crucial role in reshaping the way organizations approach vulnerability management and exposure management. By providing a quantitative measure of exploitation risk, EPSS enables security teams to focus their resources on the most pressing threats, rather than relying solely on severity ratings.

As the volume of vulnerabilities continues to increase, tools like EPSS become increasingly valuable in streamlining the triage and remediation processes. By identifying the vulnerabilities most likely to be actively exploited, EPSS helps organizations stay ahead of emerging threats and respond more effectively to the ever-evolving cyber threat landscape.

While EPSS is a powerful tool, it is essential to recognize that it should be integrated into a comprehensive cyber risk management program. EPSS provides valuable insights into the exploitation likelihood aspect of risk, but it should be complemented by other tools and strategies that address factors such as environmental context, compensating controls, and impact assessment.

By combining EPSS with other vulnerability management tools, threat intelligence sources, and risk assessment frameworks, organizations can gain a more holistic understanding of their overall cyber risk posture.

This integrated approach enables organizations to make informed decisions about resource allocation, prioritization, and mitigation strategies.

As the adoption of EPSS continues to grow, the system’s accuracy and effectiveness are expected to improve further. One of the key drivers of this improvement will be the integration of additional data sources from various vendors and security providers.

By expanding the pool of data used to train the machine learning models, EPSS can gain a more comprehensive understanding of the vulnerability landscape and refine its predictions. This continuous data integration will enable EPSS to adapt to emerging threats and evolving exploitation techniques, ensuring that its scores remain relevant and actionable.

As EPSS matures and its capabilities expand, it is likely to play an increasingly prominent role in the cyber security domain. With its ability to provide data-driven insights into exploitation risks, EPSS has the potential to shape not only vulnerability management practices but also broader security strategies and resource allocation decisions.

Moreover, the principles and methodologies behind EPSS may inspire the development of similar machine learning-based systems for other areas of cyber security, such as threat detection, incident response, and risk quantification.

By leveraging data and machine learning, and by pairing methodologies like EPSS with a holistic exposure management platform like the Vulcan Cyber ExposureOS, the cyber security industry can continue to adapt to the ever-changing threat landscape and enhance the overall security posture of organizations worldwide.