The ongoing voyages of the Federation Support Ship USS [REDACTED]

First Officer’s log, Terrestrial date, 20230612, Officer of the Deck reporting.

With the “unintended artificial intelligence” issue on the fleet cruiser sorted out, at least for some value of “sorted,” we were able to focus on completing the inspections and remediations on the [REDACTED] herself. Fortunately, as expected, our engineering team had done an excellent job executing field repairs, and the starbase found only a few minor issues that required attention.

Which put us in a position to offer liberty call for most of the crew before we picked up our next assignment.

Once again, Starfleet was seeing fit to send us out to the edge of Federation space, far from any of the major powers and deep into a region that was almost exclusively filled with smaller colonies, research stations, and the homeworlds of minor species that were not yet ready for contact.

In this case, we would be delivering supplies to a number of these worlds. None of it appeared extraordinarily dangerous, vital, or valuable, though in each case the cargo in question was something that could not be easily replicated on-site. While that raised the “value” equation in each case, and some of the cargo did require special handling, none of it justified dispatching a fast courier or a major fleet asset.

In theory, this would be a piece of cake, to quote the old Earther phrase.

What could possibly go wrong?

Well, that escalated quickly

What happened

After a critical vulnerability was disclosed in the MOVEit Transfer file transfer software, CISA (the Cybersecurity and Infrastructure Security Agency) added CVE-2023-34362 to its Known Exploited Vulnerabilities (KEV) catalog with a due date of 23 June 2023. Threat actors are known to be exploiting this vulnerability in the wild, so any organization that uses MOVEit software is encouraged to patch as soon as possible.

Why it matters

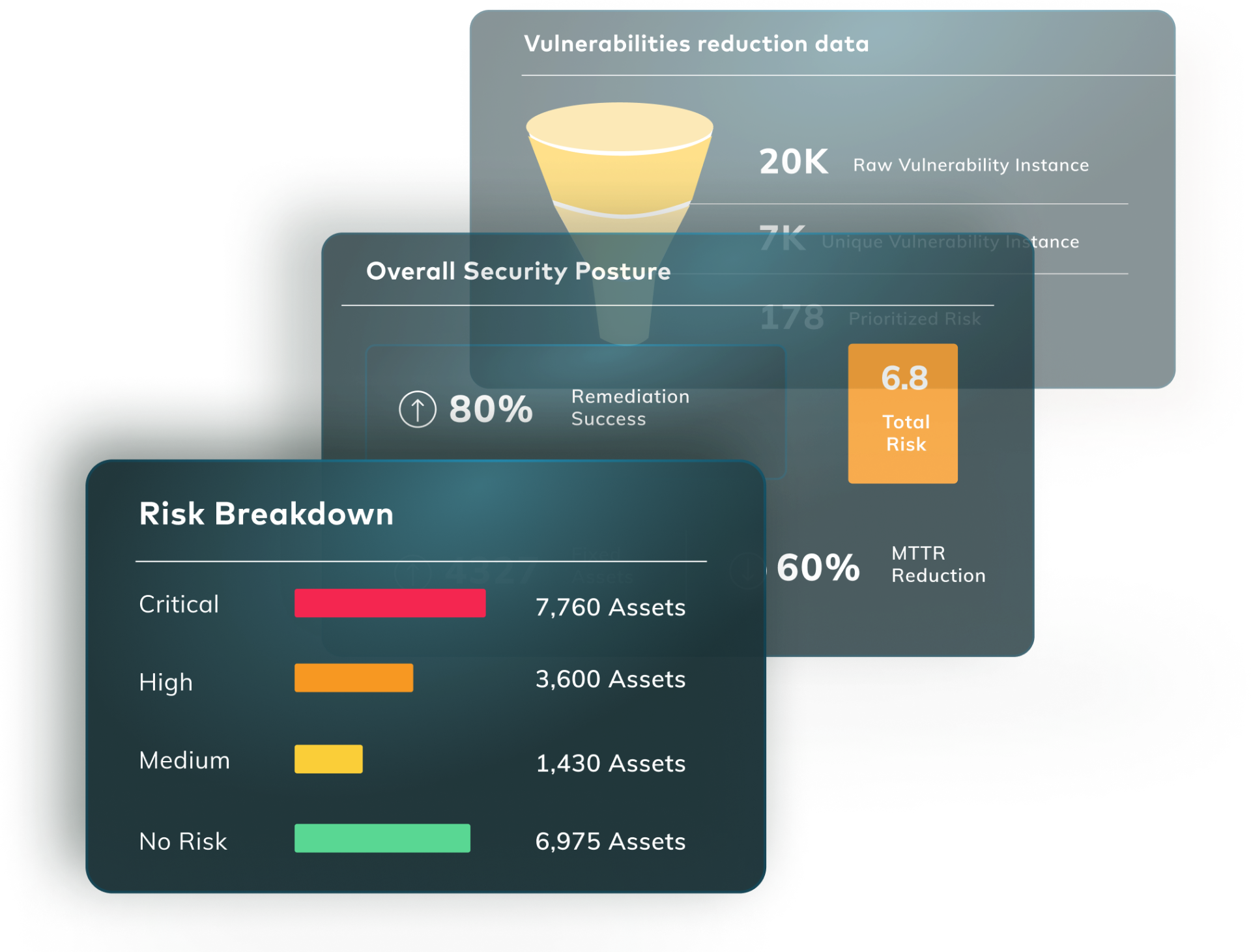

CISA has been adding vulnerabilities to its KEV catalog at a much faster rate recently, and that should be cause for some concern. The agency’s job is to protect critical infrastructure, and when it adds a vulnerability, the organizations bound by its mandate (U.S. federal civilian executive branch agencies, under Binding Operational Directive 22-01) are required to remediate it by the listed due date. Organizations outside that mandate should still treat the catalog as a to-do list. The concern is that CISA is finding more and more exploited vulnerabilities that reach the “do something now” level.

And maybe “concern” isn’t the right word here. Maybe CISA is just catching up to the level of active threats in the wild that cybersecurity professionals have been dealing with every day for years. Added awareness is good. It’s all about managing risk and balancing our resources, since an “exploited in the wild” vulnerability is always a greater risk than one that is still at the “we found it and told the vendor” stage.
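To make the prioritization point concrete, here is a minimal Python sketch that treats KEV membership as a priority signal. It assumes a local copy of CISA’s KEV catalog in its JSON form, where each entry in a `vulnerabilities` list carries `cveID` and `dueDate` fields; check those field names against the current feed before relying on them. The function names and the `CVE-2099-0001` placeholder are hypothetical, and the inline sample feed is trimmed to the single MOVEit entry from this story.

```python
import json
from datetime import date

# Hand-trimmed sample in the (assumed) shape of CISA's KEV JSON feed.
# The real feed has many more entries and more fields per entry.
SAMPLE_KEV_FEED = """
{
  "vulnerabilities": [
    {"cveID": "CVE-2023-34362", "dueDate": "2023-06-23"}
  ]
}
"""


def load_kev_due_dates(feed_text: str) -> dict[str, date]:
    """Map each known-exploited CVE ID to its remediation due date."""
    feed = json.loads(feed_text)
    return {
        entry["cveID"]: date.fromisoformat(entry["dueDate"])
        for entry in feed["vulnerabilities"]
    }


def prioritize(findings: list[str], kev: dict[str, date]) -> list[str]:
    """Known-exploited CVEs first (earliest due date first), then the rest in original order."""
    exploited = sorted((cve for cve in findings if cve in kev), key=lambda cve: kev[cve])
    remaining = [cve for cve in findings if cve not in kev]
    return exploited + remaining


if __name__ == "__main__":
    kev = load_kev_due_dates(SAMPLE_KEV_FEED)
    # CVE-2099-0001 is a hypothetical placeholder for a finding that is not in KEV.
    print(prioritize(["CVE-2099-0001", "CVE-2023-34362"], kev))
```

Sorting by due date is just the simplest tie-breaker; a real program would likely weigh KEV membership alongside asset criticality and exposure.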

What we said

Our Voyager18 team has been all over this.

SiliconANGLE

https://siliconangle.com/2023/06/04/cisa-warns-critical-vulnerability-moveit-file-transfer-software/

Who knew AI could hallucinate?

What happened

Bar Lanyado, working with other members of Vulcan Cyber’s Voyager18 team, has found some interesting ChatGPT behavior that he’s calling AI Package Hallucination. The team’s blog post explains in detail how “working as designed” can sometimes lead to unintended behavior.

Why it matters

I’ll let the blog explain things in detail, but the short-attention-span-theater version is that ChatGPT, and probably other generative AI platforms as well, sometimes recommends packages that don’t actually exist. It’s a phenomenon known as an LLM Hallucination, which we call an AI Package Hallucination in this context*. The problem is that a threat actor could publish a malicious package under a name the AI hallucinated, and someone who followed the AI’s advice could end up installing a trojan without realizing it.

While there is real potential here for threat actors to leverage this behavior, especially if they add some social engineering to the mix, it’s not going to be easy for them to use against specific targets. The attack relies on a victim getting an AI recommendation for the same hallucinated package name the attacker discovered earlier, and then installing the attacker’s purpose-built package under that name. And there is a relatively simple defense: ALWAYS vet the packages you download for use in your projects. Full stop. Do not pass go. Do not collect 200 Quatloos.

OpenAI may add some sanity checking to make sure ChatGPT only recommends “real” packages, and the package repositories can do their part. But in the end, due diligence is always on developers.

*: A term coined in-house by our team.
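What does “vet the packages” look like in practice? Here is a minimal Python sketch of one small piece of it, assuming PyPI is the registry. It queries PyPI’s JSON API (`https://pypi.org/pypi/<name>/json`, which answers HTTP 404 for a name nobody has registered) and returns red flags rather than a verdict. The function names, the `min_releases` threshold, and the specific heuristics are hypothetical choices for illustration. Passing these checks does not make a package safe: a squatter who registers a hallucinated name can add releases and links, so reading the code and checking the maintainer still matter.

```python
import json
import urllib.error
import urllib.request
from typing import Callable, Optional

# PyPI's per-project JSON endpoint; it returns 404 for unregistered names.
PYPI_JSON_URL = "https://pypi.org/pypi/{name}/json"


def fetch_pypi_metadata(name: str) -> Optional[dict]:
    """Return PyPI metadata for a project, or None if PyPI has no such project."""
    try:
        with urllib.request.urlopen(PYPI_JSON_URL.format(name=name), timeout=10) as resp:
            return json.load(resp)
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return None
        raise


def vet_package(
    name: str,
    fetch: Callable[[str], Optional[dict]] = fetch_pypi_metadata,
    min_releases: int = 3,  # hypothetical threshold; tune to your own policy
) -> list[str]:
    """Return red flags for a package name. An empty list means "no obvious problems", not "safe"."""
    meta = fetch(name)
    if meta is None:
        # Nonexistent name: possibly hallucinated, and anyone could register it later.
        return [f"{name}: not found on PyPI"]
    flags = []
    releases = meta.get("releases", {})
    if len(releases) < min_releases:
        flags.append(f"{name}: only {len(releases)} release(s)")
    info = meta.get("info", {})
    if not info.get("project_urls") and not info.get("home_page"):
        flags.append(f"{name}: no homepage or source links")
    return flags
```

Run `vet_package()` on every AI-suggested dependency before `pip install`. The `fetch` parameter is injectable so the checks can be exercised offline, or pointed at an internal mirror by swapping in a different fetch function.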

What they said

Our research was covered extensively. Take a look:

___________________________________________________________________________________________________________________________

Want to get ahead of the stories?

- Join the conversations as they happen with the Vulcan Cyber community Slack channel

- Try Vulcan Enterprise for 30 days