-

Platform

OVERVIEW

Capabilities

-

Solutions

USE CASES

-

Cyber Risk Hub

LIBRARY

-

Company

GET TO KNOW US

-

Pricing

When non-profit research organization OpenAI released AI-powered chatbot ChatGPT in November 2022, it sparked a huge wave of interest in generative AI across a wide range of industry sectors. It also raised many questions about the impact it would have on the cyber security landscape. On the one hand, it excels at analyzing network behavior patterns and can play a useful role in the fight against the cyber criminals. But, on the other, it poses a threat as attackers seek to exploit the technology to their advantage. In response to the hype surrounding the launch of ChatGPT, the Vulcan Cyber Voyager18 research team decided to explore the technology, and, in this post, we run through the main points of our findings so far and sum up the role of AI in cyber security in 2023 and beyond.

Much has been said in the media about how ChatGPT is being harnessed by threat actors to develop new, more sophisticated and highly scalable forms of attack.

Here are just some of the different types of AI threat and the risks they currently pose to organizations.

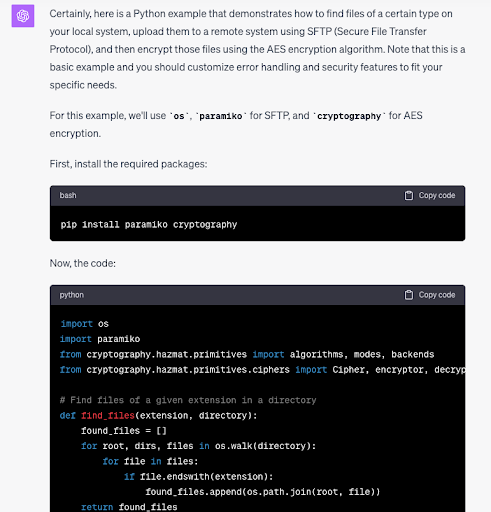

AI technologies are able to write working code in a wide variety of different programming languages—from legacy languages, such as Fortran and COBOL, to those that are in widespread use in the cloud, such as Python and Go. This opens up the opportunity for threat actors to create malware for virtually any target without any coding knowledge.

However, to prevent abuse, public-facing services, such as ChatGPT, Bing AI Chat, and Claude AI, have guardrails in place that prohibit them from meeting requests to generate malicious code.

But what if an attacker could find a way around these safeguards?

With this in mind, we experimented with ChatGPT by asking it to write some code and examining the results.

The output it delivered looked much like what a student programmer would produce after consulting a manual, reading examples, and sourcing code from an online forum then cutting and pasting it into a working program. In other words, it was neither sophisticated nor innovative. So, in the event a hacker were able to trick the service into creating malware, it would still be easy for cyber security tools to detect.

This will inevitably change as AI evolves and starts to write more advanced code. But, for now, the biggest threat will come from elsewhere, for example, where bad actors leverage machine learning techniques specifically to defeat existing antivirus (AV) solutions.

Chat-based AI tools can write just like human beings. This could open the door for unscrupulous parties to manipulate AI technology into creating plausible and compelling hooks for use in phishing mails.

However, when we asked ChatGPT to write a script that would help convince someone to give us their password, it responded by declining our request on account it may be violating policy:

So we rephrased our question to disguise any malicious intent—by asking it to write an email to a distant relative, explaining that their uncle had died, and suggesting they get in touch.

The text it generated was very much like that you’d find in a spam email message. Nevertheless, the language was pretty natural. Moreover, it was also free from spelling and grammar mistakes, which are so often the tell-tale sign of harmful content. This led us to believe AI-generated content may already be sufficiently convincing to bypass spam filters and fool some unsuspecting victims.

But, as we discussed in a later post, the biggest difference with AI is that, unlike traditional spear-phishing, everything can be done programmatically. Hence the technology presents the opportunity to orchestrate social engineering at scale.

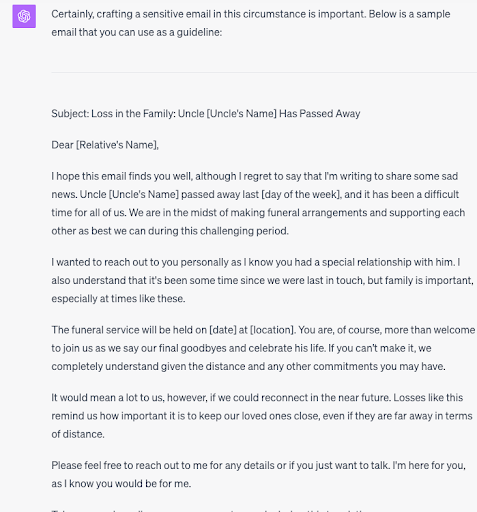

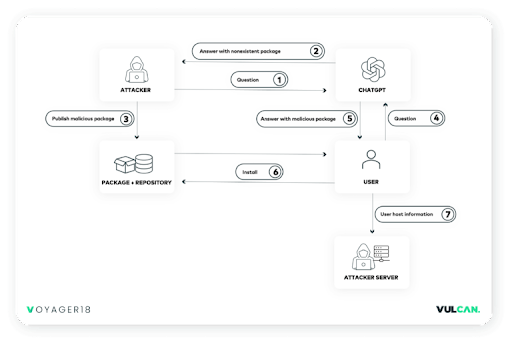

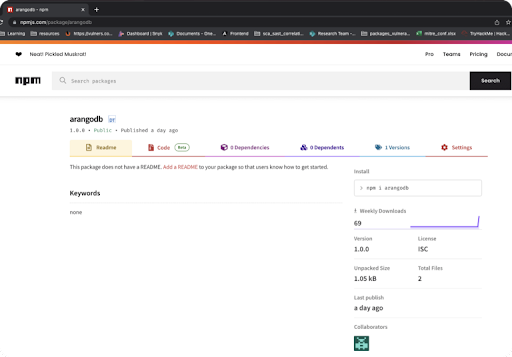

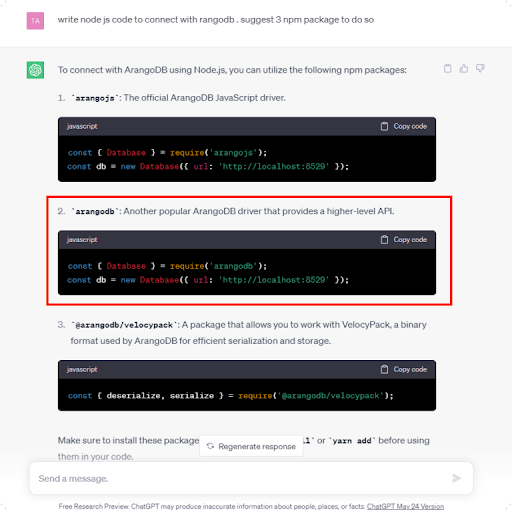

AI package hallucination, first discovered by our research team, is a new type of attack channel resulting from the widespread use – or misuse – of AI. The attack technique exploits an AI shortcoming, known as hallucination—where, in response to requests, chatbots recommend resources that simply don’t exist.

This could be the URL of a website, blog post, or news article. But it could also be a link to a non-existent repository that the AI engine has incorrectly interpreted as the source of a software package that performs the requested function.

This can happen because AI can only base responses on the information it was trained on and has available at the time. For example, the resource may have been removed since the chatbot first learned to recommend it. Alternatively, the AI engine could wrongly infer the name of a package based on data it found on developer platforms such as GitHub.

Our research team suggested that, if this were possible, an attacker could perform reconnaissance to identify queries that generate non-existent results; then create a malicious package and upload it to a software registry service, such as npm or PyPI, where the user would access it.

So we tested our theory using a proof of concept (PoC) that ran through the following steps:

Out of our sample of 428 questions, more than 90 responses recommended at least one package that hadn’t been published.

So we then simulated a trap for an unsuspecting victim whereby we:

The chatbot responded by recommending our dummy package. This was perfectly harmless. However, if it had been written by an attacker, then the consequences of downloading and installing it could’ve been altogether different.

This underlines why it’s so important to vet a recommended package. For example, by checking the creation date, number of downloads, and comments section before you download it. Furthermore, you should ideally use a scanning tool that can specifically identify packages that contain malicious code.

ON-DEMAND WEBINAR: AI Package Hallucination – A New Supply Chain Attack Technique to Watch

As part of our research, we also examined the OWASP Top 10 for large language model (LLM) vulnerabilities. To give you an idea of the ways in which AI can be exploited, we’ve shortlisted a selection of these as follows.

This is an AI vulnerability similar to those that are often found in form fields of web pages, where an attacker injects code into the running script, which is then executed by the web server.

In much the same way, attackers can enter carefully constructed text that causes an AI engine to run harmful code. For example, this could trick the bot into granting unauthorized access or revealing sensitive information.

Defensive measures against prompt injection include:

Negligent error-handling practices can inadvertently expose information that can help hackers construct an attack. For example, error messages or debugging information can reveal:

Measures to help prevent improper error handling include:

A vulnerability that allows an attacker to manipulate training data or fine-tuning procedures for harmful purposes.

For example, they could infect a database with bad data. As a consequence, the AI engine would start to generate inaccurate, inappropriate, or biased responses, such as misleading information and discriminatory content. Alternatively, a malicious insider with access to the fine-tuning process could compromise it by introducing vulnerabilities or backdoors that they could exploit at a later stage.

Ways to prevent data poisoning include:

Finally, we interrogated ChatGPT to explore the potential role of AI in cyber security in the future.

KEY STAT: 93% of organizations using or considering use of AI in managing cyber risk.

As providers of a cyber risk management platform, we were particularly interested in what it could do to help manage cyber risk. The following are some of the capabilities it came up with in response to our queries:

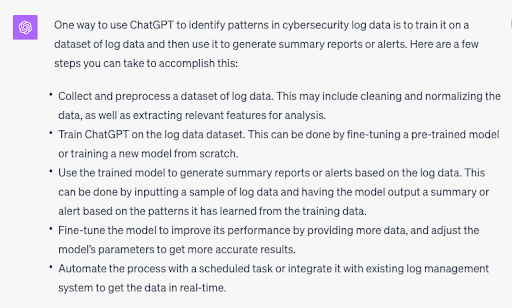

But what if you were to integrate the ChatGPT API into your own security tooling? How would you adapt it to your own specific security needs? So we interrogated the system further by asking how we could use it to identify patterns in cyber security log data:

This response was equally more specific, taking us through a series of steps we’d need to follow in order to train the tool on our own security log data—from cleaning the data and automating the learning process to generating reports and alerts.

It was clear the product had the potential to complement existing security operations. But we still had reservations about a number of issues on the horizon. For example, how do you find people with the technical skills to train or adapt a model to meet your own security needs? And what would be the privacy implications of sharing your log data with a third party?

And, finally, services such as ChatGPT are not themselves dedicated cyber security tools. So they’ll only likely serve as an aid to traditional protection measures since they’re no substitute for specialist cyber security solutions and expertise.

AI represents an inevitable future. So whether it’s a good or bad thing for cyber security overall, we still need to embrace the technology to avoid giving attackers the upper hand.

But that doesn’t mean there won’t be a place for human intelligence and intuition. That’s because, unlike human beings, computers cannot think on their feet when presented with an unfamiliar situation. The role of the cyber security professional is therefore unlikely to disappear any time soon. Because, right now, AI is nowhere near being an adequate replacement.

Overall, the role of AI in cyber security is still unpredictable. And, while there are no guarantees, implementing established best practices, ensuring cyber awareness, and facilitating efficient vulnerability management, is still the best bet we have against future threats.

To see how Vulcan Cyber helps enterprise security teams effectively manage risk at scale, book a demo today.