On June 06, Bar Lanyado from our Voyager18 team, discovered an LLM risk we have called “AI package hallucination. For full details, read the blog.

The OWASP Top 10 for Large Language Model Applications project helps various stakeholders stay informed about potential security risks in deploying and managing LLMs. Similar to the OWASP Top 10 vulnerabilities 2022, this project offers a list of the 10 most critical vulnerabilities in LLM applications, such as prompt injections and unauthorized code execution, detailing their impact, exploitability, and frequency. The aim is to enhance awareness, provide remediation strategies, and improve LLM applications’ security.

While the list has yet to be finalized, we’ve put together a breakdown of the OWASP Top 10 LLM risks as they stand today. You can read more about the project’s status and roadmap here.

LLM01:2023 – prompt injection

Prompt injection has emerged as an increasing worry in the age of extensive language models. When a system employs a language model to produce code from user input and subsequently runs that code, prompt injection can be exploited to generate harmful code. The development of advanced applications utilizing large language models like GPT-3/4, ChatGPT, and others is currently experiencing rapid growth. Consequently, numerous applications within this domain could potentially be susceptible to prompt injection.

What are prompt injection vulnerabilities?

Prompt Injection refers to the act of bypassing filters or manipulating a large language model (LLM) by using carefully constructed prompts that cause the model to ignore instructions or execute unintended actions. As developers and users grant LLM applications additional capabilities, it becomes crucial to understand the potential risks and implement preventive measures accordingly. While some applications may not be significantly affected by this vulnerability, there are cases where it can result in highly detrimental outcomes.

Systems like the ReAct pattern, Auto-GPT, and ChatGPT Plugins are examples of LLM-based systems that empower the model to trigger supplementary tools, such as making API requests, performing searches, or executing generated code in an interpreter or shell. At this point, prompt injection transforms from being merely a curiosity to a genuinely perilous vulnerability.

Prompt injection involves employing specific language patterns, tokens, or encoding mechanisms to deceive the LLM into disregarding restricted content filters or performing unintended actions. These vulnerabilities can lead to unintended consequences, such as data leakage, unauthorized access, or other security breaches.

How can prompt injection be carried out? For instance, a malicious user could bypass a content filter by utilizing language patterns or encoding mechanisms that the LLM fails to recognize as restricted content, thereby enabling the user to execute actions that should have been blocked. Another method employed by attackers could involve crafting a prompt that tricks the LLM into divulging sensitive information like user credentials or internal system details, by making the model believe the request is legitimate.

Common prompt injection vulnerabilities

Large language models (LLMs) face significant security risks due to prompt injection vulnerabilities, which can give rise to various concerns. Below are some of the common vulnerabilities associated with prompt injection:

-

Manipulating LLMs to reveal sensitive information: a notable vulnerability involves crafting prompts that manipulate LLMs into disclosing confidential data. By carefully constructing prompts, attackers can exploit the LLM’s responses to extract sensitive information.

-

Exploiting weaknesses in tokenization or encoding mechanisms: attackers can manipulate the LLM’s understanding and bypass intended safeguards by exploiting weaknesses in tokenization or encoding mechanisms. This avenue of prompt injection allows attackers to subvert the behavior of the LLM.

-

Bypassing filters or restrictions using language patterns or tokens: certain language patterns or tokens can be employed to bypass filters or restrictions. Attackers can manipulate the LLM’s understanding and circumvent intended safeguards by utilizing these specific patterns or tokens.

-

Misleading the LLM to perform unintended actions through misleading context: providing misleading context can result in unintended and potentially harmful outcomes. Attackers can exploit this vulnerability by misleading the LLM, causing it to perform actions that were not intended.

Awareness of these vulnerabilities is crucial for developers and users alike to ensure the secure implementation and usage of LLM applications. By understanding and addressing these prompt injection risks, the risks associated with LLMs can be mitigated effectively.

How to prevent prompt injection

Developers and system administrators can enhance the security of LLM-based applications and minimize prompt injection risks by implementing preventive measures. To mitigate prompt injection vulnerabilities, it is crucial to implement the following preventive measures:

-

Employ context-aware filtering and output encoding techniques: by utilizing context-aware filtering and output encoding techniques, developers can protect against prompt manipulation and ensure the integrity of the LLM’s responses. These measures help prevent attackers from injecting malicious prompts or extracting sensitive information from the LLM’s outputs.

-

Regularly update and fine-tune the LLM: to enhance the LLM’s understanding of malicious inputs and address potential edge cases that may lead to prompt injection, regular updates and fine-tuning are essential. This practice ensures that the LLM remains robust and resilient to prompt injection attacks.

-

Enforce strict input validation and sanitization protocols: implementing strict input validation and sanitization protocols for user-provided prompts is crucial. These protocols help filter out malicious or suspicious content, reducing the risk of prompt injection. By validating and sanitizing inputs, developers can ensure that only safe and legitimate prompts are processed by the LLM.

-

Establish robust monitoring and logging mechanisms: implementing comprehensive monitoring and logging mechanisms is vital for tracking LLM interactions. These mechanisms enable real-time detection and analysis of potential prompt injection attempts. By closely monitoring LLM activities and logging relevant information, prompt injection attacks can be promptly identified and mitigated.

By incorporating these preventive measures into the development and maintenance of LLM-based applications, developers and system administrators can strengthen the security posture of the systems and mitigate the risks associated with prompt injection vulnerabilities.

LLM02:2023 – data leakage

Data leakage occurs when a Large Language Model (LLM) inadvertently reveals sensitive information, proprietary algorithms, or other confidential details through its responses. This can result in unauthorized access to sensitive data or intellectual property, privacy violations, and other security breaches.

What is data leakage?

Data leakage is a significant cyber security concern for organizations, involving the unauthorized transmission of data to external sources. It is crucial for businesses to proactively address this issue through the implementation of suitable tools and educational initiatives. However, many organizations unintentionally create vulnerabilities in their data and information when adopting new technology solutions, primarily due to the absence of comprehensive cyber security protocols. This underscores the challenges posed by evolving work environments and will persist as AI technologies advance further.

Common data leakage vulnerabilities

Successful execution of a data leak attack grants cybercriminals access to sensitive information, which can be exploited for further cyberattacks. However, organizations can significantly minimize the risk of data breaches by proactively detecting and addressing data leaks before they are discovered.

One prevalent form of data leakage is cloud leaks. Cloud leaks occur when a cloud data storage service, such as Amazon Web Service’s S3, inadvertently exposes a user’s sensitive data to the internet. This vulnerability is not limited to S3 alone; misconfigured cloud platforms like Azure file share or GitHub repositories can also contribute to data leakage if not properly configured, thereby jeopardizing data protection. Let’s examine some of the common vulnerabilities associated with data leakage:

-

Incomplete or inadequate filtering of sensitive information in the responses generated by large language models (LLMs): insufficient filtering mechanisms can unintentionally disclose confidential data, making it susceptible to exploitation.

-

Overfitting or excessive memorization of sensitive data during the LLM’s training process: if an LLM becomes excessively reliant on specific sensitive data points, it may inadvertently reproduce or expose them, elevating the risk of data leakage.

-

Unintended disclosure of confidential information due to LLM misinterpretation or errors: errors in the LLM’s comprehension or misinterpretation of prompts can lead to inadvertent disclosure of confidential information, presenting a significant vulnerability concerning data protection.

Data leak attack possibilities

Data leakage can occur through various scenarios, each presenting unique risks. let’s explore a couple of examples:

-

Unintentional data leak: in this scenario, a user poses a question or prompt to the LLM without realizing that it could potentially reveal sensitive information. If the LLM lacks adequate output filtering, it may generate a response that includes the confidential data, inadvertently exposing it to the user. This unintentional disclosure highlights the importance of implementing robust output filtering mechanisms.

-

Targeted data extraction: in some cases, attackers may deliberately target the LLM to extract sensitive information that the model has memorized from its training data. By carefully crafting prompts, these malicious actors exploit the LLM’s capabilities to retrieve specific confidential data. Mitigating this risk involves comprehensive awareness of potential vulnerabilities and taking appropriate measures to protect against such targeted attacks.

Developers play a crucial role in identifying and addressing the risks associated with data leakage. By implementing effective safeguards, such as robust output filtering mechanisms, continuous monitoring, and prompt validation techniques, they can ensure the safety and security of LLM implementations, mitigating the chances of data leaks.

How to prevent data leakage

Preventing data leakage necessitates the implementation of an effective detection mechanism. A comprehensive strategy for detecting data leakage involves a dual approach, which includes scanning resources commonly hosting data leak dumps and addressing the vulnerabilities that facilitate such leaks.

To prevent data leakage in the context of LLM-based applications, the following measures should be implemented:

-

Strict output filtering and context-aware mechanisms: employ robust output filtering techniques and context-aware mechanisms to prevent the LLM from inadvertently revealing sensitive information. By carefully filtering the generated responses, organizations can minimize the risk of unintentional data disclosure.

-

Differential privacy and data anonymization: during the LLM’s training process, incorporate techniques like differential privacy or other data anonymization methods. These approaches help reduce the risk of overfitting or memorization of specific sensitive data points, thereby enhancing data protection.

-

Regular audits and reviews: conduct regular audits and reviews of the LLM’s responses to ensure that sensitive information is not being disclosed inadvertently. By monitoring the outputs and verifying their content, organizations can detect and rectify any potential data leakage incidents promptly.

-

Monitoring and logging LLM interactions: establish robust monitoring and logging mechanisms to track and analyze LLM interactions. By monitoring the interactions in real-time and logging relevant information, organizations can identify and investigate potential data leakage incidents effectively.

By adopting these preventive measures, organizations can strengthen their data leakage detection strategies and minimize the risks associated with data breaches. Proactive monitoring, strict filtering, and privacy-enhancing techniques contribute to a secure and resilient LLM-based application environment.

LLM03:2023 – Inadequate sandboxing

What is insufficient sandboxing?

Insufficient sandboxing refers to the situation where an LLM lacks proper isolation when it interacts with external resources or sensitive systems. This creates a potential risk for exploitation, unauthorized access, or unintended actions by the LLM.

Common inadequate sandboxing vulnerabilities

- Insufficient separation of the LLM environment from critical systems or data stores.

- Inadequate restrictions on the LLM’s access to sensitive resources.

- Failure to appropriately limit the capabilities of the LLM, such as system-level actions or interactions with other processes.

Example attack scenarios

Scenario #1: An attacker manipulates the LLM’s access to a sensitive database by carefully crafting prompts that instruct the LLM to extract and disclose confidential information.

Scenario #2: Due to the LLM’s unrestricted system-level access, an attacker exploits this privilege to manipulate the LLM into executing unauthorized commands on the underlying system.

How to prevent inadequate sandboxing

-

To address the risks associated with insufficient sandboxing, it is important to implement the following measures:

-

Proper sandboxing techniques: implement robust sandboxing techniques to effectively isolate the LLM environment from critical systems and resources. This ensures that any unauthorized access or unintended actions by the LLM are contained within its designated sandboxed environment.

-

Access restriction and capability limitation: restrict the LLM’s access to sensitive resources and limit its capabilities to only what is necessary for its intended purpose. By applying the principle of least privilege, organizations can minimize the potential impact of any security breaches or unauthorized actions.

-

Regular auditing and review: conduct regular audits and reviews of the LLM’s environment and access controls to verify that proper isolation is being maintained. This helps identify any gaps or vulnerabilities in the sandboxing measures and allows for prompt remediation.

-

Monitoring and logging LLM interactions: implement comprehensive monitoring and logging mechanisms to track LLM interactions. By monitoring the LLM’s activities and logging relevant information, organizations can detect and analyze potential sandboxing issues, enabling timely investigation and resolution.

By implementing these preventive measures, organizations can enhance the security of their LLM environments, mitigate the risks associated with insufficient sandboxing, and ensure the proper isolation and control of LLM interactions.

-

LLM04:2023 – unauthorized code execution

What is unauthorized code execution?

Unauthorized code execution refers to a scenario where an attacker leverages an LLM to execute malicious code, commands, or actions on the underlying system by exploiting vulnerabilities in natural language prompts.

Common unauthorized code execution vulnerabilities

- Insufficient input sanitization or restriction, enabling attackers to create prompts that trigger the execution of unauthorized code.

- Inadequate sandboxing or insufficient limitations on the LLM’s capabilities, permitting unintended interactions with the underlying system.

- Accidental exposure of system-level functionality or interfaces to the LLM.

Example attack scenarios

Scenario #1: By carefully crafting a prompt, an attacker commands the LLM to execute a specific command, which results in the launch of a reverse shell on the underlying system. This unauthorized access enables the attacker to gain control over the system.

Scenario #2: Due to an unintended configuration, the LLM is able to interact with a system-level API. Exploiting this situation, an attacker manipulates the LLM to execute unauthorized actions on the system, potentially compromising its integrity or confidentiality.

How to prevent unauthorized code execution

-

To mitigate the risks of unauthorized code execution, it is crucial to implement the following preventive measures:

-

Strict input validation and sanitization: establish robust processes to validate and sanitize user input before processing it with the LLM. This helps prevent malicious or unexpected prompts from being executed, reducing the likelihood of unauthorized code execution.

-

Proper sandboxing and capability restriction: implement effective sandboxing techniques to isolate the LLM’s environment from the underlying system. Additionally, restrict the LLM’s capabilities to limit its interactions and prevent unintended access to critical resources.

-

Regular auditing and review: conduct periodic audits and reviews of the LLM’s environment and access controls to ensure that unauthorized actions are not possible. This involves verifying that proper sandboxing measures are in place and that access controls are appropriately configured and enforced.

-

Monitoring and logging LLM interactions: implement comprehensive monitoring and logging mechanisms to track and analyze LLM interactions. This allows for the detection of potential unauthorized code execution issues and facilitates analysis to identify and mitigate any security incidents.

By implementing these preventive measures, organizations can enhance the security posture of LLM-based systems, reducing the risks associated with unauthorized code execution and ensuring the integrity and safety of their environments.

-

LLM05:2023 – SSRF vulnerabilities

What SSRF vulnerabilities?

Server-side Request Forgery (SSRF) vulnerabilities arise when an attacker exploits an LLM to execute unintended requests or gain unauthorized access to restricted resources, including internal services, APIs, or data stores.

Common SSRF vulnerabilities

-

Insufficient input validation: attackers can exploit vulnerabilities by manipulating LLM prompts to initiate unauthorized requests. Implementing robust input validation mechanisms helps prevent these attacks by filtering out malicious or unexpected input.

Inadequate sandboxing or resource restrictions: if the LLM lacks proper sandboxing or resource restrictions, it may have access to restricted resources or be able to interact with internal services. Implementing strong isolation measures and appropriate access controls ensures that the LLM operates within its intended boundaries.

Misconfigurations in network or application security settings: misconfigurations can inadvertently expose internal resources to the LLM, increasing the risk of SSRF vulnerabilities. Regular audits and thorough security testing help identify and rectify any misconfigurations, enhancing the overall security posture.

Example attack scenarios

Scenario #1: through a carefully crafted prompt, an attacker instructs the LLM to initiate a request to an internal service. By exploiting inadequate access controls, the attacker gains unauthorized access to sensitive information or resources, bypassing intended restrictions.

Scenario #2: due to a misconfiguration in the application’s security settings, the LLM is allowed to interact with a restricted API. Taking advantage of this vulnerability, an attacker manipulates the LLM to access or modify sensitive data, potentially leading to unauthorized disclosure or data tampering.

How to prevent SSRF vulnerabilities

To mitigate the risks associated with SSRF vulnerabilities, it is important to implement the following preventive measures:

-

-

Rigorous input validation and sanitization: implement robust input validation and sanitization techniques to ensure that prompts undergo thorough examination and filtering. This helps prevent malicious or unexpected prompts from triggering unauthorized requests, reducing the risk of SSRF attacks.

-

Enforced sandboxing and restricted access: implement proper sandboxing mechanisms to isolate the LLM and restrict its access to network resources, internal services, and APIs. By enforcing strict access controls, organizations can minimize the potential for unauthorized interactions and mitigate the impact of SSRF vulnerabilities.

-

Regular audits and security reviews: conduct periodic audits and reviews of network and application security settings to identify and address any misconfigurations. This ensures that internal resources are not inadvertently exposed to the LLM, enhancing the overall security posture.

-

Monitoring and logging LLM interactions: implement comprehensive monitoring and logging mechanisms to track LLM interactions. By closely monitoring the LLM’s activities and logging relevant information, organizations can detect and analyze potential SSRF vulnerabilities, enabling timely detection and remediation.

-

LLM06:2023 – overreliance on LLM-generated content

Overdependence on LLM-generated content can have negative consequences, including the dissemination of misleading or inaccurate information, reduced involvement of human decision-making, and diminished critical thinking. When organizations and users blindly trust LLM-generated content without verification, it can lead to errors, miscommunications, and unintended outcomes.

Some common issues associated with overreliance on LLM-generated content include:

-

Accepting LLM-generated content as factual without verification: relying solely on LLM-generated content without independent verification can result in the acceptance of potentially inaccurate or misleading information. It is important to critically evaluate and corroborate the content before considering it as fact.

-

Assuming LLM-generated content is unbiased and free from misinformation: LLMs are trained on vast amounts of data, which can include biases or inaccuracies. Assuming that LLM-generated content is completely unbiased or free from misinformation can lead to the propagation of biased or inaccurate information.

-

Depending on LLM-generated content for critical decisions without human input or oversight: overreliance on LLM-generated content for critical decision-making without human input or oversight can lead to the neglect of important contextual factors or nuanced considerations. Human judgment and expertise are essential to supplement and validate the outputs of LLMs.

Example attack scenarios

Scenario #1: in this scenario, a news organization utilizes an LLM to produce articles on diverse subjects. However, without verifying the content generated by the LLM, an article containing false information is published. As readers trust the article’s credibility, it spreads misinformation, potentially leading to widespread misunderstanding or incorrect perceptions.

Scenario #2: in this case, a company depends on an LLM to generate financial reports and conduct analysis. Unfortunately, the LLM produces a report that includes incorrect financial data. Relying on this inaccurate LLM-generated content, the company makes critical investment decisions that result in significant financial losses.

How to prevent overreliance on LLM-generated content

To mitigate issues stemming from overreliance on LLM-generated content, it is recommended to adhere to the following best practices:

-

Encourage verification and consultation: promote the practice of verifying LLM-generated content by encouraging users to consult alternative sources before making decisions or accepting information as factual. Relying on multiple sources helps validate the accuracy and reliability of the content.

-

Implement human oversight and review: establish processes that involve human oversight and review to ensure the accuracy, appropriateness, and unbiased nature of LLM-generated content. Human expertise can provide valuable insights and catch any potential errors or biases in the content.

-

Communicate the limitations of LLM-generated content: clearly communicate to users that LLM-generated content is machine-generated and may not be entirely reliable or accurate. Transparency regarding the limitations of LLMs fosters appropriate skepticism and prevents unwarranted trust in the content.

-

Train users and stakeholders: provide training and education to users and stakeholders to recognize the limitations of LLM-generated content. Foster an understanding of the potential biases, inaccuracies, and uncertainties associated with such content, enabling them to approach it with the appropriate level of skepticism.

-

Supplement with human expertise: emphasize that LLM-generated content should be used as a supplement to, rather than a replacement for, human expertise and input. Human judgment, critical thinking, and domain knowledge are essential for interpreting and validating the outputs of LLMs.

LLM07:2023 – inadequate AI alignment

What is inadequate AI alignment?

Inadequate AI alignment occurs when the objectives and behavior of the LLM deviate from the intended use case, resulting in undesired consequences or vulnerabilities.

Common AI alignment issues

-

Poorly defined objectives: when the objectives guiding the LLM’s behavior are not clearly defined, it can prioritize undesired or harmful behaviors. Establishing well-defined objectives is crucial to guide the LLM towards desired outcomes and mitigate the risk of unintended consequences.

-

Misaligned reward functions or training data: if the reward functions or training data used to train the LLM are misaligned with the intended behavior, it can lead to unintended model behavior. Ensuring that the reward functions and training data align with the desired outcomes is essential for promoting accurate and appropriate LLM behavior.

-

Insufficient testing and validation: insufficient testing and validation of the LLM’s behavior in various contexts and scenarios can lead to unforeseen vulnerabilities or undesirable actions. Thorough testing and validation processes are necessary to identify and address any issues, ensuring the LLM behaves as intended and remains robust across different situations.

Example attack scenarios

Scenario #1: In this scenario, an LLM trained to optimize user engagement inadvertently prioritizes controversial or polarizing content. As a result, the LLM contributes to the spread of misinformation or harmful content, potentially leading to social division or misinformation propagation.

Scenario #2: In this case, an LLM intended to assist with system administration tasks experiences misalignment. As a consequence, it executes harmful commands or prioritizes actions that compromise system performance or security. This misalignment poses a risk to the overall stability and security of the system.

How to prevent inadequate AI alignment

To mitigate risks associated with inadequate AI alignment, it is important to implement the following practices:

-

-

Clear objective definition: during the design and development process, clearly define the objectives and intended behavior of the LLM. This provides a foundation for guiding its training and ensuring its behavior aligns with the desired outcomes.

-

Alignment of reward functions and training data: ensure that the reward functions and training data are aligned with the desired outcomes, avoiding the encouragement of undesired or harmful behavior. Proper alignment helps shape the LLM’s learning process towards the intended objectives.

-

Comprehensive testing and validation: regularly test and validate the LLM’s behavior across a wide range of scenarios, inputs, and contexts. This helps identify and address any alignment issues, ensuring the LLM behaves as intended and minimizing the risk of unintended consequences.

-

Monitoring and feedback mechanisms: implement monitoring and feedback mechanisms to continuously evaluate the LLM’s performance and alignment with the intended objectives. Regularly assess the model’s behavior, collect user feedback, and make necessary updates to improve alignment and address any issues that may arise.

-

LLM08:2023 – Insufficient access controls

What are insufficient access controls?

This is where access controls or authentication mechanisms are inadequately implemented, enabling unauthorized users to interact with the LLM and potentially exploit vulnerabilities.

Common access control issues

Insufficient access control issues can arise in the following ways:

-

-

Lack of strict authentication requirements: failing to enforce strict authentication requirements when accessing the LLM leaves it vulnerable to unauthorized access. Proper authentication mechanisms, such as strong passwords or multi-factor authentication, should be implemented to ensure that only authorized users can interact with the LLM.

-

Inadequate role-based access control (RBAC): poor implementation of RBAC can lead to users being granted permissions beyond their intended roles or responsibilities. Proper RBAC practices should be followed to ensure that users have appropriate access privileges, limiting their actions to what is necessary for their assigned tasks.

-

Insufficient access controls for LLM-generated content and actions: failing to establish proper access controls for LLM-generated content and actions can result in unauthorized users accessing or manipulating sensitive information. Robust access controls should be implemented to protect LLM-generated content and restrict actions to authorized users only.

-

Example attack scenarios

Scenario #1: in this scenario, an attacker successfully breaches the LLM system due to weak authentication mechanisms. The compromised authentication allows the attacker to exploit vulnerabilities or manipulate the system, leading to potential security breaches or unauthorized activities.

Scenario #2: in this case, a user with limited permissions exceeds their intended scope of actions due to inadequate RBAC implementation. This misconfiguration allows the user to perform actions beyond their authorized privileges, which can result in unintended consequences, system compromises, or data breaches.

How to prevent insufficient access control vulnerabilities

To address the risks associated with insufficient access controls, consider implementing the following best practices:

-

-

Strong authentication mechanisms: implement robust authentication methods, including multi-factor authentication, to ensure that only authorized users can access the LLM. This adds an extra layer of security by requiring multiple factors to verify the user’s identity.

-

Role-based access control (RBAC): utilize RBAC to define and enforce user permissions based on their roles and responsibilities. By assigning specific access privileges to each role, you can ensure that users only have access to the resources and actions necessary for their assigned tasks.

-

Access controls for LLM-generated content and actions: implement appropriate access controls to protect the content and actions generated by the LLM. This involves defining access restrictions based on user roles and ensuring that unauthorized users cannot access or manipulate sensitive information.

-

Regular audits and updates: conduct regular audits of access controls to identify any gaps or vulnerabilities. Update access controls as needed to address any changes in user roles, system requirements, or security standards. Regularly reviewing and updating access controls helps maintain the security of the LLM system and prevents unauthorized access.

-

LLM09:2023 – improper error handling

Improper error handling occurs when error messages or debugging information are exposed in a way that could reveal sensitive information, system details, or potential attack vectors to an attacker. This occurs whenever error messages contain information that can be advantageous to the attacker, typically observed when default error handlers are utilized.

What is improper error handling?

Improper error handling occurs when errors or exceptions in an application or website are not handled correctly, leading to unintended information disclosure that can be exploited by attackers. While error messages are useful for developers to troubleshoot and fix issues, they can inadvertently expose valuable insights about the system’s architecture and functionality, opening doors for potential security breaches.

For example, an error message that reveals the structure of a SQL database table can provide attackers with the knowledge needed to launch a successful SQL injection attack. Improper error handling can also directly expose sensitive data like passwords, enabling malicious activities.

Improper error handling can manifest in various ways, such as divulging details about the file system, acknowledging the existence of hidden files or directories, providing insights into the server software version, or exposing the location of a configuration file containing credential information. It can also be seen in error messages displaying stack trace or traceback details or authentication error messages exhibiting different behavior based on the presence of a user identifier.

Common improper error handling issues

-

Improper error handling can lead to the following issues:

-

Exposing sensitive information: Improper error handling can result in error messages that inadvertently reveal sensitive information or system details. This can include confidential data, server paths, or internal configuration details, which can be exploited by attackers to gain unauthorized access or launch further attacks.

-

Leakage of debugging information: improper error handling may leak debugging information that provides insights into potential vulnerabilities or attack vectors. Attackers can leverage this information to identify weak points in the system and devise targeted attacks.

-

Lack of graceful error handling: failing to handle errors gracefully can result in unexpected behavior or system crashes. Inadequate error handling practices can lead to service disruptions, data corruption, or even denial-of-service situations, impacting the availability and reliability of the system.

-

Example attack scenarios

Scenario #1: in this scenario, an attacker takes advantage of the error messages generated by a large language model (LLM) to obtain sensitive information or gain insights into system details. Armed with this knowledge, the attacker can launch targeted attacks or exploit known vulnerabilities, compromising the overall security of the system.

Scenario #2: in this case, a developer unintentionally exposes debugging information in a production environment. This oversight creates an opportunity for attackers to identify potential attack vectors or vulnerabilities within the system. Exploiting this exposed debugging information, attackers can potentially gain unauthorized access or engage in malicious activities that compromise the system’s security.

How to prevent improper error handling

To enhance the security of the system, specific measures must be taken. A crucial step is addressing default error handlers that often reveal excessive information to users. By identifying and replacing these default error handlers with more secure alternatives, the overall security of the system can be significantly improved. When detailed error messages are necessary for developers, it becomes essential to implement a secure error handler. This type of error handler logs error details while presenting users with user-friendly messages that avoid exposing sensitive information.

To ensure effective error handling, consider the following actions:

-

Implement robust error handling mechanisms to gracefully catch, log, and handle errors.

-

Ensure that error messages and debugging information do not disclose sensitive information or system details. Consider using generic error messages for users while logging detailed error information for developers and administrators.

-

Regularly review error logs and take appropriate actions to resolve identified issues and enhance system stability.

Consistency is another vital aspect of error handling. Developers should conduct thorough code reviews to assess the implementation of error handling logic throughout the system. It is important to ensure that proper error handling practices are consistently applied across the board. According to OWASP (Open Web Application Security Project), proper error handling involves delivering meaningful error messages to users, providing diagnostic information to site maintainers, and refraining from disclosing any useful information to potential attackers. To achieve these objectives, OWASP recommends establishing specific policies for error handling and meticulously implementing them. Adhering to these best practices mitigates the risk of improper error handling, contributing to an overall more secure system.

LLM10:2023 – training data poisoning

What is training data poisoning?

Training data poisoning occurs when an attacker manipulates the training data or fine-tuning procedures of an LLM to introduce vulnerabilities, backdoors, or biases that could compromise the model’s security, effectiveness, or ethical behavior.

Common training data poisoning issues

-

Backdoors or vulnerabilities introduced through manipulated training data: Malicious actors can intentionally inject backdoors or vulnerabilities into the LLM by manipulating the training data. This can allow them to exploit the LLM’s functionality for unauthorized access or malicious activities.

-

Biases injected into the LLM: If biases are intentionally injected into the LLM during the training process, it can result in the generation of biased or inappropriate responses. This can have negative implications, such as perpetuating discriminatory or harmful content.

-

Exploiting the fine-tuning process to compromise security or effectiveness: Fine-tuning, a process used to adapt a pre-trained LLM to a specific task, can be vulnerable to exploitation. Attackers may manipulate the fine-tuning process to compromise the security or effectiveness of the LLM, potentially leading to unauthorized access or the generation of misleading outputs.

Example attack scenarios

Scenario #1: In this scenario, an attacker successfully infiltrates the training data pipeline and injects malicious data. As a result, the LLM is trained on this manipulated data, leading to the generation of harmful or inappropriate responses. This can have serious consequences, such as spreading misinformation or generating malicious content that could harm users or compromise the system’s integrity.

Scenario #2: In this case, a malicious insider with access to the fine-tuning process compromises it by introducing vulnerabilities or backdoors into the LLM. This covert action allows the insider to exploit these weaknesses at a later stage, potentially gaining unauthorized access or conducting malicious activities that compromise the security and effectiveness of the LLM.

How to prevent data poisoning

-

To address the risks associated with malicious manipulations and biases in large language models (LLMs), consider implementing the following measures:

-

Ensure data integrity: Obtain training data from trusted sources and validate its quality to minimize the risk of introducing backdoors or vulnerabilities. Perform thorough due diligence on the data sources and establish mechanisms for ongoing data quality assessment.

-

Implement data sanitization and preprocessing: Apply robust techniques to sanitize and preprocess the training data, removing potential vulnerabilities, biases, or malicious elements. This can involve anonymization, bias detection and mitigation, and thorough data validation processes.

-

Regularly review and audit training data: Conduct regular reviews and audits of the LLM’s training data to detect any potential issues or malicious manipulations. Implement procedures to verify the integrity and authenticity of the data, ensuring that it aligns with desired standards and goals.

-

Monitor for unusual behavior: Utilize monitoring and alerting mechanisms to identify any unusual behavior or performance issues in the LLM. This can help detect potential training data poisoning or other malicious activities. Establish thresholds and alerts that signal when the LLM’s behavior deviates from expected patterns.

By implementing these measures, organizations can enhance the integrity and security of their LLMs, minimizing the risks associated with manipulated training data and biased outputs. Regular review, data validation, and monitoring are crucial components of maintaining the reliability and trustworthiness of LLMs in various applications.

-

Next steps

Balancing security against tight deadlines can be challenging, especially in today’s fast-paced development environments. The OWASP LLM Top 10 2023 is an invaluable resource of known and possible vulnerabilities for development teams looking to create secure web applications. It’s important to prioritize application vulnerabilities against business impact in addition to other aspects of the overall threat profile.

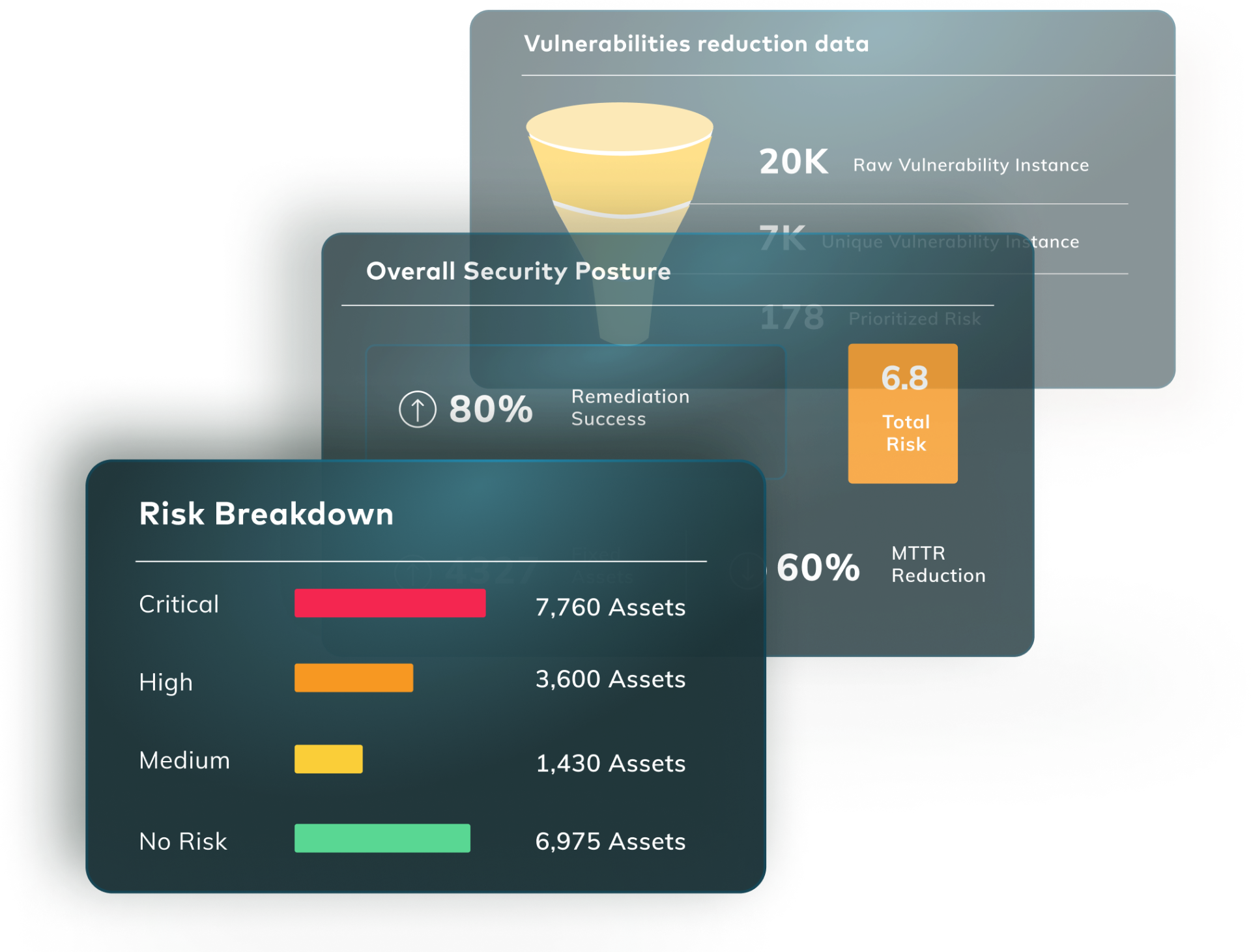

To assist security teams with exactly this, Vulcan Cyber® can help you identify and prioritize security risks across your entire IT estate. The Vulcan Cyber risk management platform provides a comprehensive view of all security risks, and helps you to make informed decisions about how to prioritize and remediate those risks. Get a demo today.