While Kubernetes offers a feature-rich, flexible, and extensible platform, the downside is that securing it can be quite challenging. A single misconfiguration can expose your application data and allow malicious actors to compromise your services.

Key stat: A 2023 survey found that 37% of respondents suffered revenue or customer losses stemming from a security incident involving containers and Kubernetes.

While there are several ways to protect against common threats such as unauthorized access, denial-of-service attacks, and malware propagation, these measures do little to prevent more sophisticated attacks like credential theft, privilege escalation, and lateral movement. An effective Kubernetes security strategy must cover all phases of the container and application lifecycle, which requires a thorough, multifaceted approach.

Whether you’re running on-premises or using managed solutions such as AWS EKS, Azure AKS, or GCP GKE, Kubernetes offers various native security mechanisms and tools to address security concerns. However, these often fall short in today’s rapidly changing security landscape. This article explores security fundamentals and best practices your organization can leverage to implement robust security across your Kubernetes environment.

What you’ll learn

- Kubernetes security – the fundamentals

- Securing Kubernetes clusters

- Container and image security best practices

- Securing a cluster

- Securing applications

- Limiting application permissions

- Securing cluster access: User and access management with RBAC

- Securing Kubernetes – final thoughts

Kubernetes security – the fundamentals

Securing Kubernetes has long been a challenge for security teams, in part because many security features are not enabled by default, so teams must decide which ones to activate and how to configure them. Managing the configuration of cluster components is also an arduous task, which can further complicate the delivery and automation of secure workloads. In addition, for modern businesses that deploy workloads across multiple cloud platforms, multi-cloud security brings challenges that are often unique to each provider and poorly understood.

While Kubernetes offers a number of security features out of the box, the platform does not include all the necessary tools required to build secure microservices architectures. As such, developers must implement certain best practices when building services using containers. The guidelines in this Kubernetes cheat sheet help protect against common vulnerabilities such as insecure configuration management, weak authentication mechanisms, poor logging, and misconfigured firewalls.

Cloud security basics

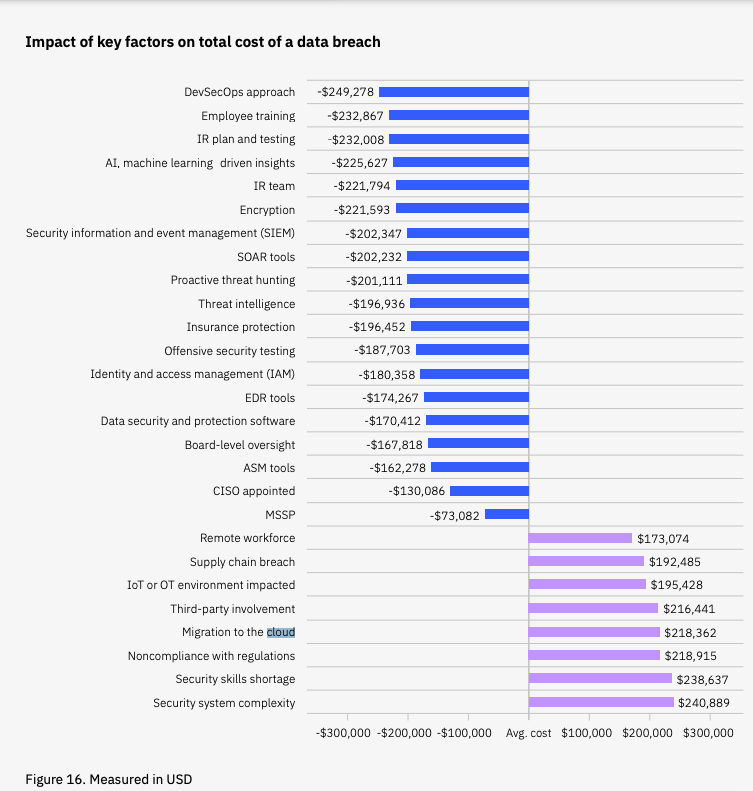

Cloud-native security practices leverage a defense-in-depth approach. This allows security teams to use a layered system to augment controls and establish virtual borders across your organization’s tech stack. Within this framework, each layer in the security model is encompassed by the next outermost layer, which acts as a defense in the event of a security breach. And these breaches are far from hypothetical: IBM reported migration to the cloud as one of the biggest factors leading to data breaches in 2023.

Cloud-native security is based on four fundamental principles:

1. Application security

Also referred to as code security, this principle addresses attack surfaces in the source code of microservices. While securing source code lies outside the scope of a Kubernetes cluster, recommendations for protecting source code for applications running in the orchestrator’s environment include:

- Encrypting traffic between applications using TLS

- Only exposing ports that are crucial for metric collection and communication

- Always scanning third-party libraries for vulnerabilities

- Using static application security testing (SAST) tools to enforce secure coding practices

- Using penetration tests and threat modeling tools to detect attack surfaces before they are exploited

2. Container security

While Kubernetes schedules and orchestrates containers, the containers themselves are created and run by container runtimes such as containerd, CRI-O, or Docker. The container runtime layer has its own attack surface, which must be hardened to ensure robust security across the entire ecosystem.

Best practices for securing container runtimes include:

- Enforcing image-signing policies: Untrusted or tampered container images are a common route for successful attacks. To mitigate this, enforce image-signing policies, which establish a trust system for container images and repositories so that only images from verified publishers can run.

- Principle of least privilege: By default, the defined security policy should grant only the minimum user privileges required for managing containers; privilege level should be escalated only if absolutely necessary.

- Container isolation: Security policies should restrict workloads to container runtimes that provide strong isolation, for example sandboxed runtimes selected per pod through a Kubernetes RuntimeClass.
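As a sketch of the container-isolation point, a RuntimeClass lets an individual pod opt into a specific runtime handler. This example assumes a sandboxed runtime such as gVisor is already installed on the nodes and registered with the CRI runtime under the handler name `runsc`; the names `gvisor` and `sandboxed-app` and the image reference are hypothetical.

```yaml
# RuntimeClass mapping a Kubernetes name to a runtime handler configured on the nodes.
# Assumption: the node's CRI runtime (e.g. containerd) has a handler named "runsc" (gVisor).
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: gvisor                 # hypothetical name
handler: runsc                 # must match the handler name in the CRI runtime config
---
# A pod that requests the sandboxed runtime through runtimeClassName.
apiVersion: v1
kind: Pod
metadata:
  name: sandboxed-app          # hypothetical
spec:
  runtimeClassName: gvisor
  containers:
    - name: app
      image: registry.example.com/app:1.0   # placeholder image
```

A pod that lands on a node without the `runsc` handler will not start, so if only some nodes have the sandbox installed, the RuntimeClass's optional `scheduling.nodeSelector` field can steer such pods to those nodes.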

3. Cluster security

Cluster security addresses the secure configuration of cluster components and the security of applications hosted within the cluster. The main cluster components to look out for are the etcd data store and the API server. The Kubernetes documentation provides detailed guidelines for configuring cluster services and controlling access to them.

4. Cloud security

This principle involves securing all the components and services of the infrastructure that collectively support the cloud instance. Under the shared responsibility model, the cloud service provider (whether AWS, Azure, or GCP) secures the underlying infrastructure, but as the customer, your organization must still configure and regularly monitor these services to fit your use case. Automated tools that detect misconfigurations help ensure open vulnerabilities are mitigated, which improves the platform’s security posture.

How Kubernetes implements security

While securing your workloads is the primary goal, it is also critical to implement security controls throughout the container lifecycle. This involves a combination of approaches for each phase of the application lifecycle, as discussed in this section.

Authentication

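Authentication is the process of verifying a user’s identity to ensure only recognized accounts can access a system. Kubernetes distinguishes between two kinds of identities: service accounts, which Kubernetes manages and which identify workloads running in pods, and normal users, whose identities are managed outside the cluster (for example, by a certificate authority or an identity provider). The API server can authenticate HTTP requests using a number of methods, including:

- X.509 client certificates

- Bearer tokens (such as service account tokens and OpenID Connect tokens)

- An authenticating proxy

- HTTP basic authentication (supported only in older releases; removed in Kubernetes 1.19)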
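To illustrate client-certificate authentication, the kubeconfig below makes `kubectl` present an X.509 certificate to the API server, which takes the username from the certificate’s Common Name (CN) and group memberships from its Organization (O) fields. The cluster name, server address, user `alice`, and file paths are placeholders.

```yaml
# kubeconfig for a user who authenticates with an X.509 client certificate.
# All names, addresses, and file paths below are placeholders.
apiVersion: v1
kind: Config
clusters:
  - name: example-cluster
    cluster:
      server: https://203.0.113.10:6443                 # API server endpoint
      certificate-authority: /home/alice/.kube/ca.crt   # CA used to verify the API server
users:
  - name: alice
    user:
      client-certificate: /home/alice/.kube/alice.crt   # certificate with CN=alice
      client-key: /home/alice/.kube/alice.key
contexts:
  - name: alice@example-cluster
    context:
      cluster: example-cluster
      user: alice
current-context: alice@example-cluster
```

Authentication only establishes who `alice` is; what she may then do is decided separately by the authorization step.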

Authorization

Once a user has been authenticated into a system, the authorization process determines the user’s permissions and level of access to resources. Security teams can create a set of policies used to authorize access requests using any, all, or a combination of the following authorization strategies:

- Role-based access control (RBAC)

- Attribute-based access control (ABAC)

- Node authorization

- Webhook
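The authorizers in use are chosen with the API server’s `--authorization-mode` flag, which takes a comma-separated list. A minimal sketch, assuming a kubeadm-style cluster where the API server runs as a static Pod (managed services such as EKS, AKS, and GKE do not expose this file); the image tag and file path are illustrative, and all other required flags are omitted.

```yaml
# Excerpt (not a complete manifest) of a kube-apiserver static Pod,
# e.g. /etc/kubernetes/manifests/kube-apiserver.yaml on a kubeadm cluster.
apiVersion: v1
kind: Pod
metadata:
  name: kube-apiserver
  namespace: kube-system
spec:
  containers:
    - name: kube-apiserver
      image: registry.k8s.io/kube-apiserver:v1.29.0   # version is illustrative
      command:
        - kube-apiserver
        # Authorizers are consulted in this order. The first one that allows or
        # denies a request decides; if none has an opinion, the request is denied.
        - --authorization-mode=Node,RBAC
        # ...other required flags omitted...
```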

Image security

Images in both private and public registries often contain vulnerabilities. To keep such vulnerabilities out of production, Kubernetes can use admission controllers to ensure that only images meeting your security policies are deployed. Apart from leveraging admission controllers, it is also important to adopt the following best practices for robust image security:

- Build security into the CI/CD pipeline.

- Vet third-party image registries.

- Use minimal base images.

- Opt for private, internal registries.

Network policies

By default, pods are non-isolated: they accept traffic from any source. Network policies let teams specify how pods communicate with other pods, namespaces, and IP blocks, controlling traffic flow at the IP address and port level (OSI layers 3 and 4). A policy can specify which ports and protocols a pod may use for incoming and outgoing connections. Once a pod is selected by a policy that restricts ingress or egress, it becomes isolated in that direction and accepts only the traffic that policies explicitly allow. Network policies are enforced by the cluster’s network plugin (CNI), so they take effect only if that plugin supports them.
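A common starting point is a default-deny policy in each namespace, after which allowed traffic is opened up explicitly. This sketch assumes a CNI plugin that enforces NetworkPolicy (Calico and Cilium are examples); the namespace `test` is a placeholder.

```yaml
# Default-deny ingress for every pod in the "test" namespace.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
  namespace: test            # example namespace
spec:
  podSelector: {}            # empty selector = all pods in the namespace
  policyTypes:
    - Ingress                # no ingress rules are listed, so all inbound traffic is denied
```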

Leverage Security Resources: Secrets & ConfigMaps

Secrets and ConfigMaps are used to inject configuration data into containers at startup. Secrets are intended for sensitive information, such as credentials and authentication tokens. Their values are base64 encoded, but base64 is an encoding, not encryption, and by default Secrets are stored unencrypted in etcd unless encryption at rest is configured. ConfigMaps store their data as plain text and are meant for non-sensitive configuration data, such as environment variables and config files.
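To protect Secret values in etcd itself, the API server can encrypt them at rest. A minimal sketch of an `EncryptionConfiguration` using the `secretbox` provider; the key name and the placeholder key value are assumptions, and the file is passed to the API server with `--encryption-provider-config`.

```yaml
# Encryption-at-rest configuration for the API server, referenced by
# --encryption-provider-config=<path to this file>.
apiVersion: apiserver.config.k8s.io/v1
kind: EncryptionConfiguration
resources:
  - resources:
      - secrets
    providers:
      # The first provider in the list is used to encrypt new writes.
      - secretbox:
          keys:
            - name: key1
              secret: <BASE64-ENCODED 32-BYTE KEY>   # placeholder, e.g. from: head -c 32 /dev/urandom | base64
      # identity lets the API server still read Secrets written before encryption was enabled.
      - identity: {}
```

Existing Secrets are encrypted only when they are next written, so they need to be rewritten after this is enabled (for example, `kubectl get secrets --all-namespaces -o json | kubectl replace -f -`). Where available, a KMS provider, which keeps the key outside the control plane host, is generally preferable to a locally stored key.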

Securing Kubernetes clusters

There are various frameworks and best practices for securing a Kubernetes cluster. Discussed below are some of the more popular compliance frameworks offering guidelines on how to secure your Kubernetes cluster.

Kubernetes security & compliance frameworks

Kubernetes security frameworks were developed to provide standardized guidelines for managing security risks based on real-world scenarios. Some of the more prominent Kubernetes security frameworks are discussed as follows.

CIS Kubernetes Benchmark

The Center for Internet Security (CIS) Kubernetes Benchmark enables security teams to harden Kubernetes clusters by providing guidance on how to securely configure cluster components. CIS also recommends tools to help your organization assess its current security state and identify areas for improvement. This supports cluster-wide configuration scanning and helps standardize efforts to minimize cluster attack surfaces.

Kubernetes MITRE ATT&CK® framework

The MITRE ATT&CK framework for Kubernetes provides a template for threat modeling through the documentation of various tools, tactics, and techniques used in attacks. Because it is based on real-world observations, your organization can leverage the framework to simulate the behavior of an attacker and identify vulnerable entry points.

PCI-DSS compliance for containers

The PCI-DSS compliance framework outlines best practices to protect cardholder data in containerized applications. PCI-DSS is a universally recognized standard allowing for the assessment of your applications and the security posture of the underlying platform, thus enhancing privacy and protecting confidential data.

NIST Application Container Security framework

The NIST Application Container Security Guide features a risk management framework for hardening containerized environments and securing containers. The document outlines various security concerns associated with container orchestration and recommends remediation practices for hardened security.

8 Kubernetes security best practices

Securing Kubernetes workloads requires a comprehensive approach to administering security across all layers of the ecosystem. Best practices to secure Kubernetes workloads therefore focus on addressing multiple challenges of platform dependencies and architectural vulnerabilities, as discussed in this section.

1. Use the latest Kubernetes version

As a well-managed CNCF project, Kubernetes is constantly updated as vulnerabilities are discovered and patched. Running a recent, still-supported minor release (the project maintains patch releases for the three most recent minor versions) is a must for organizations that want to take advantage of new security features and receive fixes for newly discovered vulnerabilities.

2. Use role-based access control

RBAC simplifies access control by assigning privileges according to your organizational structure and defined roles. RBAC is recommended not only for user-level access but also for administering cluster-wide and namespace-specific permissions.

3. Isolate resources using namespaces

Namespaces are virtual clusters that help organize resources through access control restrictions, network policies, and other crucial security controls. To ensure resources are not susceptible to attacks, namespaces should be used to establish boundaries that make it easier to apply security controls.

4. Separate sensitive workloads

As a best practice, sensitive applications should run on a dedicated set of nodes to limit the impact of successful attacks. You’ll also want to make sure these nodes are isolated from public-facing services, which makes it harder for an attacker who compromises one node to escalate privileges and reach other nodes.
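One way to pin sensitive workloads to dedicated nodes is to label and taint those nodes, then give only the sensitive pods a matching `nodeSelector` and toleration. The label and taint key `workload-class`, the node name `node-1`, and the pod details are hypothetical.

```yaml
# Assumes the dedicated node was prepared beforehand, for example:
#   kubectl label nodes node-1 workload-class=sensitive
#   kubectl taint nodes node-1 workload-class=sensitive:NoSchedule
apiVersion: v1
kind: Pod
metadata:
  name: payments-api                  # hypothetical sensitive workload
spec:
  nodeSelector:
    workload-class: sensitive         # only schedule onto the labeled nodes
  tolerations:
    - key: workload-class             # allowed onto nodes carrying the matching taint
      operator: Equal
      value: sensitive
      effect: NoSchedule
  containers:
    - name: app
      image: registry.example.com/payments-api:1.0   # placeholder image
```

The taint keeps other pods off the dedicated nodes, while the `nodeSelector` keeps the sensitive pods on them; either one alone provides only half of the separation.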

5. Secure etcd

Because etcd holds the entire state of the cluster, including Secrets, etcd servers are a common target for attackers. It is therefore important to ensure that the etcd service has adequate security measures in place. Access to etcd should require strong authentication, such as TLS certificates with mutual authentication between client and server.

6. Use cluster-wide pod security policies

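Your organization should define robust cluster-wide policies that outline how workloads may run in the cluster. On current Kubernetes versions, this is typically done with the built-in Pod Security Admission controller, which enforces the Pod Security Standards (its predecessor, PodSecurityPolicy, was removed in Kubernetes 1.25). In addition, ensure that your network configuration allows traffic only where necessary, and disable unnecessary ports and protocols that may open vulnerable entry points.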
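One concrete way to enforce such policies is Pod Security Admission (GA since Kubernetes 1.25), which is configured per namespace through labels. A minimal sketch; the namespace name `payments` is hypothetical.

```yaml
# Namespace that applies the "restricted" Pod Security Standard.
apiVersion: v1
kind: Namespace
metadata:
  name: payments                                      # hypothetical namespace
  labels:
    pod-security.kubernetes.io/enforce: restricted    # reject pods that violate the standard
    pod-security.kubernetes.io/enforce-version: latest
    pod-security.kubernetes.io/warn: restricted       # return warnings to clients on violations
    pod-security.kubernetes.io/audit: restricted      # record violations in the audit log
```

Rolling out with only the `warn` and `audit` labels first is a low-risk way to find non-compliant workloads before switching on `enforce`.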

7. Keep nodes secure

It’s important to minimize administrative access to nodes, while also controlling network access to ports used by the Kubelet service. It is also recommended to use iptables rules for ingress and egress traffic on the cluster’s public IP address as well as any other external services or applications that may need to connect to the cluster. This will help prevent malicious users from accessing nodes via SSH/RDP connections.

8. Activate audit logging

Audit logs are a set of records that contain information about the events and actions performed by an entity or process on Kubernetes cluster resources. The event data can be used for troubleshooting purposes as well as auditing activities such as security audits. Audit log entries also provide details regarding the identity of the user who initiated each action. It is recommended to use audit logs and monitor them to correlate activity with users across your organization’s network boundaries.
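Which events are recorded, and in how much detail, is controlled by an audit policy file passed to the API server with `--audit-policy-file`, alongside a log destination such as `--audit-log-path`. The rules below are an illustrative starting point, not a recommended baseline.

```yaml
# Audit policy; rules are evaluated in order and the first match sets the level.
apiVersion: audit.k8s.io/v1
kind: Policy
omitStages:
  - RequestReceived            # skip the separate "request received" event
rules:
  # Secrets and ConfigMaps: record who accessed what, but never the payloads.
  - level: Metadata
    resources:
      - group: ""
        resources: ["secrets", "configmaps"]
  # Changes to RBAC objects: keep full request and response bodies.
  - level: RequestResponse
    verbs: ["create", "update", "patch", "delete"]
    resources:
      - group: "rbac.authorization.k8s.io"
        resources: ["roles", "rolebindings", "clusterroles", "clusterrolebindings"]
  # Everything else: metadata only (user, verb, resource, timestamp).
  - level: Metadata
```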

Container and image security best practices

Ensuring your containers run securely is an important first step in improving the security of your Kubernetes cluster. This section walks you through best practices for securing containers and container images, including how to configure the relevant security features correctly and protect your cluster from potential threats.

Rootless container

A rootless container is one in which both the container runtime and the container run as an unprivileged user on the host rather than as root (UID 0). This offers several advantages:

- Security: Avoiding running the container as the root user can help reduce the danger of privilege escalation threats. If an attacker successfully takes over the container, they would not have root access to the host system and would have only restricted access to and control over private host resources.

- Isolation: Rootless containers provide an extra barrier of separation from the host system. When several containers operate on the same host, you want to ensure that they don’t interfere with one another or the host system.

- Ease of use: Running containers as a non-root user can make maintaining and deploying containers simpler since you don’t have to worry about giving the container root rights or managing root-level permissions.

Overall, rootless containers can make it simpler to manage and deploy containerized applications while simultaneously enhancing security and isolation.

Headless container

A container that lacks a user interface (UI) or graphical user interface (GUI) is said to be “headless.” It is intended to operate in the background and perform tasks without requesting user input or producing a visible result.

Headless containers are frequently utilized to execute background operations or microservices. For instance, you may host a web server that responds to API requests, a message queue, or a database server within a headless container. You can execute processes in a lightweight, isolated environment without the overhead of a complete GUI by using headless containers. They don’t need the infrastructure or resources required to support a UI. This makes them simpler to deploy and manage.

Since you cannot interact with headless containers directly through a UI, they can be more challenging to diagnose and troubleshoot. To identify the source of the container’s problems, you may in some cases need to rely on debugging tools or log files.

Image scanning

Before being deployed into a production environment, container images can be examined for vulnerabilities and other security issues. This procedure is known as “container image scanning.” It is a critical part of secure containerization, since it helps ensure that the containers you are running do not contain known vulnerabilities and that they comply with your organization’s security requirements. You can perform container image scanning using various tools, such as Trivy, Snyk, Grype, and Clair, each with its own features and capabilities.

Container image signing

Container image signing is a security process that allows you to add a digital signature to a container image to verify its authenticity and integrity. The publisher’s private key is used to create the signature, which can then be validated using the associated public key.

Container image signing helps ensure that the image hasn’t been altered or tampered with in any manner, which is what makes it central to establishing an image’s authenticity and integrity. There are several tools available for container image signing, including Cosign (part of the Sigstore project), Notary, and The Update Framework (TUF, a CNCF graduated project).
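Signatures only help if the cluster checks them at admission time. As one example, here is a policy for Kyverno, a third-party policy engine that the text above does not mention and that we use purely for illustration. It admits pods from a placeholder registry only if their images carry a Cosign signature made with a key pair created by `cosign generate-key-pair` and applied with `cosign sign --key cosign.key <image>`. Field names follow Kyverno’s `kyverno.io/v1` API as we understand it; newer Kyverno releases move the failure action onto each rule, so check the documentation for your version.

```yaml
# Kyverno ClusterPolicy: pods whose images match registry.example.com/* are
# admitted only if the image has a valid Cosign signature for the given public key.
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: verify-image-signatures
spec:
  validationFailureAction: Enforce   # reject, rather than just report, unsigned images
  webhookTimeoutSeconds: 30          # signature checks call out to the registry
  rules:
    - name: require-cosign-signature
      match:
        any:
          - resources:
              kinds:
                - Pod
      verifyImages:
        - imageReferences:
            - "registry.example.com/*"   # placeholder registry pattern
          attestors:
            - entries:
                - keys:
                    publicKeys: |-
                      -----BEGIN PUBLIC KEY-----
                      <contents of cosign.pub>
                      -----END PUBLIC KEY-----
```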

Using a trusted registry

Using policies and role-based access control (RBAC), a trusted registry secures artifacts. It also makes sure that images are inspected for vulnerabilities and are signed off as trusted. Some of the benefits of using a trusted registry include:

- Security: A trusted registry can help ensure the security of the container images being used by verifying the authenticity and integrity of the images. This can help prevent the deployment of tampered or compromised images.

- Compliance: A trusted registry can help ensure compliance with security and regulatory standards by providing an auditable repository for container images.

- Collaboration: A trusted registry can facilitate collaboration and container image sharing among developers within an organization. It can provide a location for storing and managing images, making it easier for developers to use the images they need.
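When the trusted registry is private, pods reference a registry credential Secret through `imagePullSecrets`, and the kubelet uses it to authenticate the pull. A minimal sketch; the registry host, the Secret name `regcred`, and the image are placeholders.

```yaml
# Assumes a registry credential Secret created beforehand, for example:
#   kubectl create secret docker-registry regcred \
#     --docker-server=registry.example.com \
#     --docker-username=<user> --docker-password=<password>
apiVersion: v1
kind: Pod
metadata:
  name: private-image-app                      # hypothetical
spec:
  imagePullSecrets:
    - name: regcred                            # kubelet authenticates to the registry with this Secret
  containers:
    - name: app
      image: registry.example.com/team/app:1.0 # image hosted in the private registry
```

Attaching `regcred` to a ServiceAccount’s `imagePullSecrets` instead applies it automatically to every pod that uses that service account.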

Securing a cluster

There are multiple points to consider when securing a Kubernetes cluster. You may need to make additional configurations to your cluster, applications, networks, or even containers to prevent security attacks.

Running secure pods

Kubernetes provides built-in policy constructs, and its dynamic admission control features also allow external policy engines to act as part of the control plane.

Running secure pods is an important aspect of securing your Kubernetes cluster. There are several best practices you can follow to ensure the security of your pods:

- Run the containers as non-root users: Unless the image or the pod specification sets a different user, containers typically run as root. This can pose a security risk if the container is compromised. Running them as a non-root user can help reduce this risk.

- Use container runtime security: Container runtime security features such as AppArmor, SELinux, and seccomp can help to enforce security policies and prevent containers from accessing unauthorized resources.

- Keep containers patched and up to date: Apply patches and updates to your containers on a regular basis to fix known vulnerabilities.

- Limit the resources available to your containers: You can use resource limits and quotas to prevent containers from consuming too many resources, which can help prevent resource exhaustion and potential denial-of-service attacks.
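Resource limits and quotas are usually applied per namespace. The sketch below pairs a LimitRange, which supplies default requests and limits for containers that do not set their own, with a ResourceQuota that caps the namespace’s total consumption. The namespace `test` and all quantities are illustrative.

```yaml
# Defaults for containers in "test" that omit their own requests/limits.
apiVersion: v1
kind: LimitRange
metadata:
  name: default-container-limits
  namespace: test
spec:
  limits:
    - type: Container
      default:            # applied as limits when a container sets none
        cpu: 500m
        memory: 256Mi
      defaultRequest:     # applied as requests when a container sets none
        cpu: 100m
        memory: 128Mi
---
# Hard cap on what all pods in "test" may request and consume together.
apiVersion: v1
kind: ResourceQuota
metadata:
  name: namespace-quota
  namespace: test
spec:
  hard:
    requests.cpu: "4"
    requests.memory: 8Gi
    limits.cpu: "8"
    limits.memory: 16Gi
    pods: "20"
```

Once a quota covers CPU and memory, pods that specify no requests or limits are rejected, which is why the LimitRange defaults are useful alongside it.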

Preventing host access

Users can specify a security context to define the privilege and access-control settings that isolate a container from the host on which it runs. A container running as root uses the same UID 0 as the host’s root user (unless user namespaces are in use), so a container breakout can give an attacker root-level access to the host, which can lead to serious issues.

In order to prevent this, it is necessary to follow recommended practices for using security contexts:

- Drop root privileges in containers as soon as possible.

- Run containers as non-root whenever possible.
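These recommendations translate into pod and container `securityContext` fields. A minimal sketch; the pod name, UID, and image are placeholders, and `readOnlyRootFilesystem` may require the application to write only to mounted volumes.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: hardened-app                      # hypothetical
spec:
  hostNetwork: false                      # host namespaces stay unshared
  hostPID: false                          # (these are the defaults; shown for clarity)
  hostIPC: false
  securityContext:                        # pod-level settings apply to all containers
    runAsNonRoot: true                    # kubelet refuses to start a container that would run as UID 0
    runAsUser: 10001
    seccompProfile:
      type: RuntimeDefault                # use the container runtime's default seccomp profile
  containers:
    - name: app
      image: registry.example.com/app:1.0 # placeholder image
      securityContext:
        privileged: false
        allowPrivilegeEscalation: false   # sets no_new_privs, so setuid binaries cannot gain privileges
        readOnlyRootFilesystem: true
        capabilities:
          drop: ["ALL"]                   # drop every Linux capability
```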

Restricting image registry

There are a number of ways to restrict container image registries:

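- Use a private registry: Only authorized users can access the images stored in the registry.

- Use imagePullSecrets: imagePullSecrets is a field in a pod’s specification that references Secrets containing registry credentials, which the kubelet uses to authenticate with the registry and pull the specified images.

- Use network-level egress controls: Firewall or proxy rules on the nodes can limit which registries the cluster is able to pull from. Kubernetes network policies govern pod traffic and do not apply to image pulls, which are performed by the kubelet and container runtime on the node.

- Use admission controllers: A validating admission webhook or a ValidatingAdmissionPolicy can be used to enforce policies on the images allowed in your cluster. The PodSecurityPolicy admission controller never controlled image sources and was removed in Kubernetes 1.25.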
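As a sketch of the admission-controller approach without an external webhook, a ValidatingAdmissionPolicy (the `admissionregistration.k8s.io/v1` API, GA in Kubernetes 1.30) can check image references with a CEL expression. The allowed prefix `registry.example.com/` and the object names are placeholders.

```yaml
# Rejects pods whose containers or init containers use images from other registries.
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingAdmissionPolicy
metadata:
  name: allowed-registries
spec:
  failurePolicy: Fail
  matchConstraints:
    resourceRules:
      - apiGroups: [""]
        apiVersions: ["v1"]
        operations: ["CREATE", "UPDATE"]
        resources: ["pods"]
  validations:
    # CEL: every image must start with the allowed registry prefix.
    - expression: >-
        object.spec.containers.all(c, c.image.startsWith('registry.example.com/')) &&
        (!has(object.spec.initContainers) ||
        object.spec.initContainers.all(c, c.image.startsWith('registry.example.com/')))
      message: "Images must be pulled from registry.example.com."
---
# The binding activates the policy; with no matchResources it covers every
# request that the policy itself matches.
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingAdmissionPolicyBinding
metadata:
  name: allowed-registries-binding
spec:
  policyName: allowed-registries
  validationActions: ["Deny"]
```

As written, this also blocks system pods that pull from other registries, so in practice the binding would usually be scoped with `matchResources.namespaceSelector`.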

etcd security

etcd is a distributed key-value store that Kubernetes uses to store its configuration and the state of the cluster. In a standard setup, only the API server talks to etcd directly; other components read and write cluster state through the API server. Because etcd stores sensitive data, including Secrets, and is critical to the operation of the cluster, ensuring that it is well secured is crucial:

- Only authorized clients should be able to access etcd, authenticating with TLS client certificates.

- etcd’s built-in role-based access control (users and roles) can be used to specify which clients are allowed to perform which actions.

- Communication between etcd and other components in the cluster, and between etcd members, must be encrypted using TLS.

- Firewall rules should restrict access to etcd’s client and peer ports (2379 and 2380 by default) so that only the API server and other etcd members can reach it.
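The TLS requirements above map onto etcd’s own configuration file. This is an excerpt showing only the transport-security settings; member and cluster settings are omitted, and the addresses and certificate paths are placeholders.

```yaml
# Excerpt of an etcd configuration file, loaded with: etcd --config-file=<path>
listen-client-urls: https://10.0.0.11:2379      # clients (the API server) connect here
advertise-client-urls: https://10.0.0.11:2379
listen-peer-urls: https://10.0.0.11:2380        # other etcd members connect here
client-transport-security:
  cert-file: /etc/etcd/pki/server.crt
  key-file: /etc/etcd/pki/server.key
  client-cert-auth: true                        # require a client certificate...
  trusted-ca-file: /etc/etcd/pki/ca.crt         # ...signed by this CA
peer-transport-security:
  cert-file: /etc/etcd/pki/peer.crt
  key-file: /etc/etcd/pki/peer.key
  client-cert-auth: true                        # mutual TLS between members as well
  trusted-ca-file: /etc/etcd/pki/ca.crt
```

On the other side of the connection, the API server presents its own client certificate via `--etcd-certfile` and `--etcd-keyfile` and verifies etcd with `--etcd-cafile`, using an `https://` address in `--etcd-servers`.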

Kubelet security

The kubelet, which runs on every node in a cluster, is responsible for starting and stopping pods on its node and keeping them in the desired state. Communication between the kubelet and the Kubernetes API server should use TLS in both directions, which protects the traffic and lets each side verify the other’s identity, guarding the cluster against unauthorized access through the kubelet.

You need to configure the kubelet to authenticate with the API server using a client certificate to ensure that only authorized kubelets can communicate with it. The API server in turn limits what each kubelet may do, typically through the Node authorizer and the NodeRestriction admission plugin, which restrict a kubelet to objects related to its own node. The kubelet’s own HTTPS API should also reject anonymous requests and delegate authorization decisions to the API server.
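On the kubelet side, hardening typically means disabling anonymous access to the kubelet’s HTTPS API, verifying client certificates, and delegating authorization to the API server. A minimal sketch of the relevant fields in a KubeletConfiguration file; the CA path is a placeholder, and other settings are omitted.

```yaml
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
authentication:
  anonymous:
    enabled: false                         # reject unauthenticated requests to the kubelet API
  webhook:
    enabled: true                          # validate bearer tokens via the API server
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.crt   # accept client certificates signed by this CA
authorization:
  mode: Webhook                            # ask the API server whether each request is allowed
readOnlyPort: 0                            # disable the unauthenticated read-only port
rotateCertificates: true                   # renew the kubelet's client certificate automatically
```

On the API server side, NodeRestriction is enabled with `--enable-admission-plugins=NodeRestriction` (alongside any other plugins you use), and the Node authorizer through `--authorization-mode`.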

Admission webhooks

Every Kubernetes configuration change—whether made by administrators, end users, extensions, integrated controllers, or external management systems—employs declarative APIs. Following caller authentication and API request authorization, Kubernetes executes defined admission controllers that offer additional controls for how an API request is handled. The various built-in admission controllers in Kubernetes can be enabled or disabled using API server flags.

Kubernetes admission webhooks allow you to enforce custom security policies on your cluster by intercepting requests to the Kubernetes API server and either allowing or denying those requests based on your policies. This can be useful for preventing the deployment of untrusted or malicious code as well as for enforcing other security policies such as resource quotas and access control.

Admission webhooks are implemented as HTTP servers that handle incoming admission requests sent from API servers and return corresponding responses that indicate whether the request should be allowed or denied.

You can configure your admission webhooks to trigger on specific kinds of requests, such as the creation of a new resource or updating of an existing resource. Using admission webhooks adds an extra layer of security to your cluster by ensuring that only trusted and compliant resources are allowed to be deployed. This can help prevent potential security vulnerabilities and ensure the integrity of your cluster.
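A webhook is registered with a ValidatingWebhookConfiguration that tells the API server which requests to send and where. The sketch below routes Deployment creates and updates to a hypothetical policy service `policy-system/policy-webhook`; the webhook server itself, its TLS certificate, and the CA bundle value are assumptions not shown here.

```yaml
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  name: deployment-policy
webhooks:
  - name: deployments.policy.example.com   # must be a fully qualified name
    admissionReviewVersions: ["v1"]        # AdmissionReview versions the server understands
    sideEffects: None                      # the webhook changes nothing outside the review
    failurePolicy: Fail                    # reject matching requests if the webhook is unreachable
    timeoutSeconds: 5
    rules:
      - apiGroups: ["apps"]
        apiVersions: ["v1"]
        operations: ["CREATE", "UPDATE"]
        resources: ["deployments"]
    clientConfig:
      service:
        namespace: policy-system
        name: policy-webhook
        path: /validate
        port: 443
      caBundle: <BASE64-ENCODED CA CERTIFICATE>   # CA that signed the webhook's TLS certificate
```

`failurePolicy: Fail` is the safer choice for security, but it means a webhook outage blocks matching deployments; `Ignore` makes the opposite trade-off.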

Securing applications

Applications that interact with external systems, such as databases, need credentials for those systems. This brings us to another important point: securing application secrets.

Kubernetes secret resource RBAC

Limiting access to Kubernetes Secrets through RBAC is an important step in protecting sensitive information. RBAC permissions are purely additive (there are no "deny" rules), so you restrict access by granting Secret permissions only to the users and service accounts that need them; anyone without a matching grant is denied by default.

To control access to Secrets, create a Role or ClusterRole that lists exactly the permissions you want to grant. For example, you might create a Role that allows users to read and update Secrets but not delete them, simply by leaving the delete verb out. You then grant a Role to a user, group, or service account with a RoleBinding; a ClusterRole can be granted with either a RoleBinding (within one namespace) or a ClusterRoleBinding (across the cluster).

Here is an example of a role definition:

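```yaml
# Role granting read-only access to Secrets in the "default" namespace.
# Note: "list" and "watch" also return Secret contents, not just names.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: secret-read
  namespace: default
rules:
  - apiGroups: [""]          # "" is the core API group, where Secrets live
    resources: ["secrets"]
    verbs: ["get", "watch", "list"]
```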

Secrets Store CSI Driver

The Secrets Store CSI Driver uses the Container Storage Interface (CSI) to integrate external secret stores with Kubernetes volumes. It lets pods mount multiple secrets, keys, and certificates held in external secret stores as a volume. Once the volume is mounted, its contents are available as files inside the container. You configure the driver with the SecretProviderClass custom resource.
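A minimal sketch of a SecretProviderClass and a pod that mounts it. It assumes the HashiCorp Vault provider is installed; the `parameters` block is provider-specific (the keys shown follow the Vault provider’s format as we understand it and differ for AWS, Azure, or GCP), and all names, paths, and addresses are hypothetical.

```yaml
apiVersion: secrets-store.csi.x-k8s.io/v1
kind: SecretProviderClass
metadata:
  name: vault-db-creds
  namespace: default
spec:
  provider: vault                                   # assumption: Vault provider is installed
  parameters:                                       # provider-specific; check your provider's docs
    vaultAddress: "https://vault.example.com:8200"  # placeholder
    roleName: "db-app"                              # hypothetical Vault role
    objects: |
      - objectName: "db-password"
        secretPath: "secret/data/db"
        secretKey: "password"
---
apiVersion: v1
kind: Pod
metadata:
  name: db-client
  namespace: default
spec:
  serviceAccountName: db-client         # identity the provider authenticates with (hypothetical)
  containers:
    - name: app
      image: registry.example.com/db-client:1.0   # placeholder image
      volumeMounts:
        - name: secrets-store
          mountPath: /mnt/secrets-store # db-password appears here as a file
          readOnly: true
  volumes:
    - name: secrets-store
      csi:
        driver: secrets-store.csi.k8s.io
        readOnly: true
        volumeAttributes:
          secretProviderClass: vault-db-creds
```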

Limiting application permissions

It is important to limit an application’s permissions, as attackers may take advantage of application privileges or permissions to accomplish unauthorized operations in a cluster.

Service accounts for pods

Service accounts (SAs) provide an identity for processes running in pods. Each service account belongs to a specific namespace, and its token is used to authenticate the pod’s requests to the API server; what the pod may then do, whether reading other cluster resources or calling the API on behalf of an application, is determined by the RBAC roles bound to that service account.

Service account tokens can also be used for workload-to-workload authentication. And because service accounts are ordinary RBAC subjects, you can bind them to narrowly scoped roles. For example, you might create a service account that has read-only access to secrets.

Here is an example of a ServiceAccount and a RoleBinding that grants it the secret-read Role:

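```yaml
# ServiceAccount that pods can run as.
apiVersion: v1
kind: ServiceAccount
metadata:
  name: service-account
  namespace: default
---
# Grants the secret-read Role to that ServiceAccount within the "default" namespace.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: secret-reader-binding
  namespace: default
subjects:
  - kind: ServiceAccount
    name: service-account
    namespace: default
roleRef:
  kind: Role
  name: secret-read
  apiGroup: rbac.authorization.k8s.io
```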
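To put the `service-account` identity to use, a pod names it in `serviceAccountName`. Conversely, workloads that never call the API server are better off with no token mounted at all. A minimal sketch; the pod name, the second ServiceAccount `no-api-access`, and the image are hypothetical.

```yaml
# Pod whose API requests carry the "service-account" identity, so it can do
# only what that ServiceAccount's RoleBindings grant.
apiVersion: v1
kind: Pod
metadata:
  name: secret-reader
  namespace: default
spec:
  serviceAccountName: service-account
  containers:
    - name: app
      image: registry.example.com/secret-reader:1.0   # placeholder image
---
# ServiceAccount for workloads that never call the API server: pods using it
# get no token mounted unless a pod explicitly opts back in.
apiVersion: v1
kind: ServiceAccount
metadata:
  name: no-api-access
  namespace: default
automountServiceAccountToken: false
```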

Network policies

Network policies allow you to specify network communication rules between applications. They offer a way to control inbound and outbound traffic at the pod level and can be used to create isolation between pods or to expose certain services to the outside world.

You can improve the security of your application by:

- Limiting the traffic that is allowed to flow between pods

- Exposing certain services to the public internet while keeping other services private

- Creating isolation between different parts of your application or between different teams within your organization
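As an example of limiting traffic between parts of an application, the policy below lets only pods labeled `app: frontend` reach pods labeled `app: backend`, and only on TCP port 8080. The labels, namespace, and port are placeholders, and enforcement again depends on a CNI plugin that supports network policies.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: backend-allow-frontend
  namespace: test
spec:
  podSelector:
    matchLabels:
      app: backend              # the policy protects the backend pods
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector:          # frontend pods in the same namespace as the policy
            matchLabels:
              app: frontend
      ports:
        - protocol: TCP
          port: 8080
```

All other inbound traffic to the backend pods is dropped, because selecting them with an ingress policy isolates them for ingress.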

Securing cluster access: User and access management with RBAC

RBAC is a way to restrict access to computer or network resources according to users’ roles. Using this approach, you can set up granular rights that specify how a certain user or group of users can interact with objects in the cluster.

Consider an example of an access matrix:

- Alice has read and write access to foo but has no access to bar.

- Bob has only read access to bar and has no access to foo.

In Kubernetes, you implement RBAC by creating Role and RoleBinding resources. Roles contain a set of permissions (e.g., read access to a certain resource), and RoleBindings grant those permissions to a user, group, or service account (e.g., the "developers" group).

You can also use cluster roles and cluster role bindings to grant permissions clusterwide rather than to a particular namespace.

Here is an example of RBAC with Role and RoleBinding:

```yaml
# Role: full read/write access to pods in the "test" namespace.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: test
  name: pod-reader-writer
rules:
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
---
# RoleBinding: grants the Role to everyone in the "developers" group, in "test" only.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: pod-reader-writer-binding
  namespace: test
subjects:
  - kind: Group
    name: developers
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader-writer
  apiGroup: rbac.authorization.k8s.io
```
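For the clusterwide case, the same pattern uses a ClusterRole and a ClusterRoleBinding. A sketch granting read-only access to nodes, a cluster-scoped resource that a namespaced Role cannot cover; the group `sre` is hypothetical.

```yaml
# ClusterRole: read-only access to nodes. ClusterRoles have no namespace.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: node-reader
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
---
# ClusterRoleBinding: grants the ClusterRole across the whole cluster.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: node-reader-binding
subjects:
  - kind: Group
    name: sre                    # hypothetical group
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: node-reader
  apiGroup: rbac.authorization.k8s.io
```

A quick way to check the result is impersonation, for example `kubectl auth can-i list nodes --as=someone --as-group=sre`.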

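It’s important to note that RBAC must be enabled on the API server: the kube-apiserver uses RBAC only when it is listed in the --authorization-mode flag (for example, --authorization-mode=Node,RBAC). Most installers, such as kubeadm, and managed services like EKS, AKS, and GKE enable it by default, but self-managed clusters should confirm that the flag is set.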

Securing Kubernetes – final thoughts

Despite its quick adoption and growing popularity, Kubernetes presents many challenges, security prime among them. One survey found that over 74% of software companies reported they were already running their production workloads using a Kubernetes distribution. According to another survey, 55% of organizations delay production rollouts, citing security as one of the primary concerns.

Provided your platform is correctly configured, Kubernetes provides an excellent foundation for building highly available and scalable containerized apps. By following best practices and frameworks included in our Kubernetes cheat sheet, you’ll be able to implement robust security controls for your Kubernetes clusters.

Give your security teams what they need to get the fix done. The Vulcan Cyber risk management platform, featuring integrations with Kubernetes, containerized environments, and all the major cloud providers, allows you to see all of your risk in one place, so you don’t just keep up; you get ahead.